I gave a guest lecture in Hamel and Shreya’s AI Evals for Engineers and PMs course (my slides).

It was a ton of fun, and I really apprecate the opportunity to share my experience with the students.

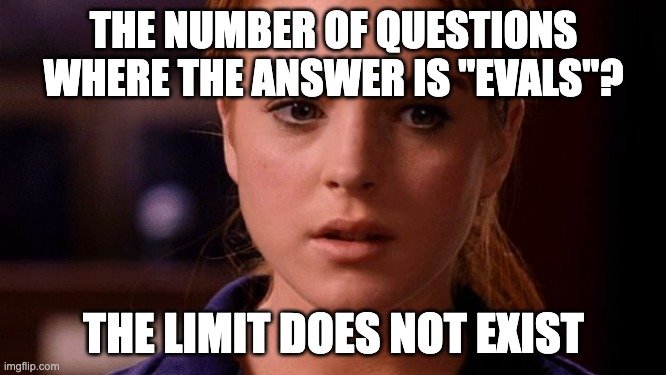

One thing struck me. Every single person is asking the same kind of questions:

- “Should I use late interaction?”

- “What’s the best way to chunk my documents?”

- “How do I know if my information extraction is working”

- “Which embedding model should I use?”

The funny thing is: the answer is always evals.

For the purposes of this post, when I say “eval” I mean: any process that measures the performance of an AI system along some dimension(s). This is a broad definition which includes e.g. error analysis, human evaluations, and more.

People Don’t Want to Learn to Fish. They Just Want the Fish.

Jason Liu often says his experience has shown him that people don’t want to learn to fish. They just want the fish.

I think this is a common problem in the AI space. People want the answer, they don’t want to learn how to get the answer. You can take courses from Hamel, Shreya, Jason, Hugo and many others…

But if you don’t go out and fish, it won’t matter.

There’s a certain nirvana to some wise person just telling you what to do.

There are too many decisions to make and too many ways to make them.

- What model should I use? Open weight?

- Fine-tuned? Which provider? Which inference engine?

- What chunking strategy should I use?

- What embedding model should I use?

- What retriever should I use?

- … and so many more

We would prefer to be like Reese from Malcolm in the Middle, who learns that the way to live is to stop thinking:

But we’re not Reese. And we can’t be.

The Devil in the Details

or: Why We Can’t Have Nice Things

Traditional software systems are more predictable than AI systems. A hotel booking system is going to work mostly the same way. As long as you consider how many users, how many hotels, latency, cost, etc. You can make good decisions about technologies to select. You generally are not asking “how often will booking the room fail?” Or if you are, you’re talking about > 99% reliability.

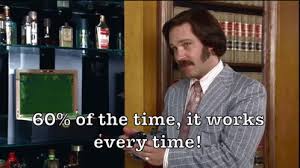

AI systems have the same cost, scale, and latency considerations as traditional software systems. But with AI systems, < 90% reliability is common. The fact that AI systems are stochastic (i.e. do not always return the same answer) means that you can’t just say “I’ll use X” and be done with it. Reliability is always a consideration. And usually the bottleneck.

The definition of “reliable” is inherently domain specific:

- for a hotel booking system, the question is “how often will the user not be able to book a room?”

- for a chatbot, the question is “how often will the user not be able to get the answer they want?”

- for a search engine, the question is “how often will the user not be able to find the information they want?”

- for a coding agent, the question is “how often will the user not be able to get the code they want?”

- … etc

And the reality is even more complicated. Because it’s never “reliability for my domain for all people”. It’s “reliability for my domain for my users with my/their data.” The end result is that I cannot tell you a priori what you should do. I can only teach you how to fish.

A Tale of Two Chatbots

Two clients I recently worked with both needed help building customer support chatbots.

- A developer tooling company with SDKs and APIs for their products

- A hardware store with a catalog of products with structured specifications

Both clients had a similar problem: they needed to build a chatbot to help their customers with their products. So we can use the same architecture and approach, right? Right?

You might imagine that the best way to start is:

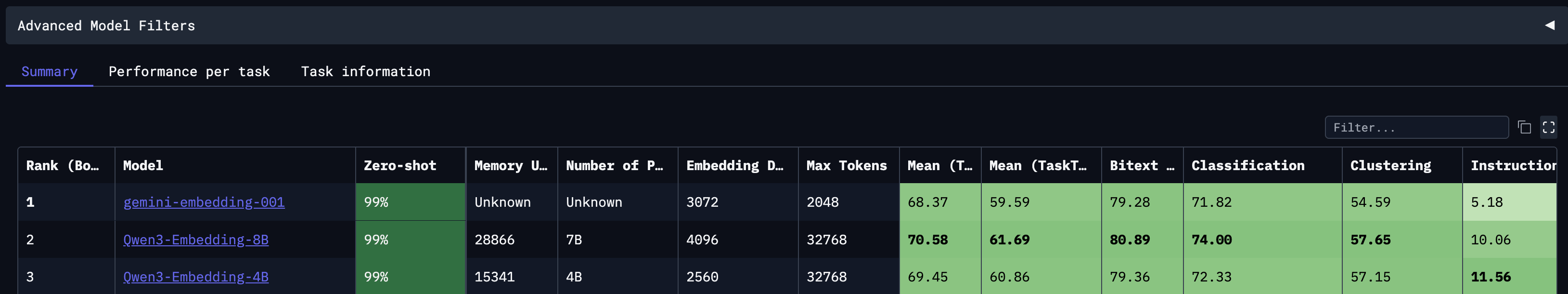

- Pick an embedding / retrieval approach that performs best on MTEB

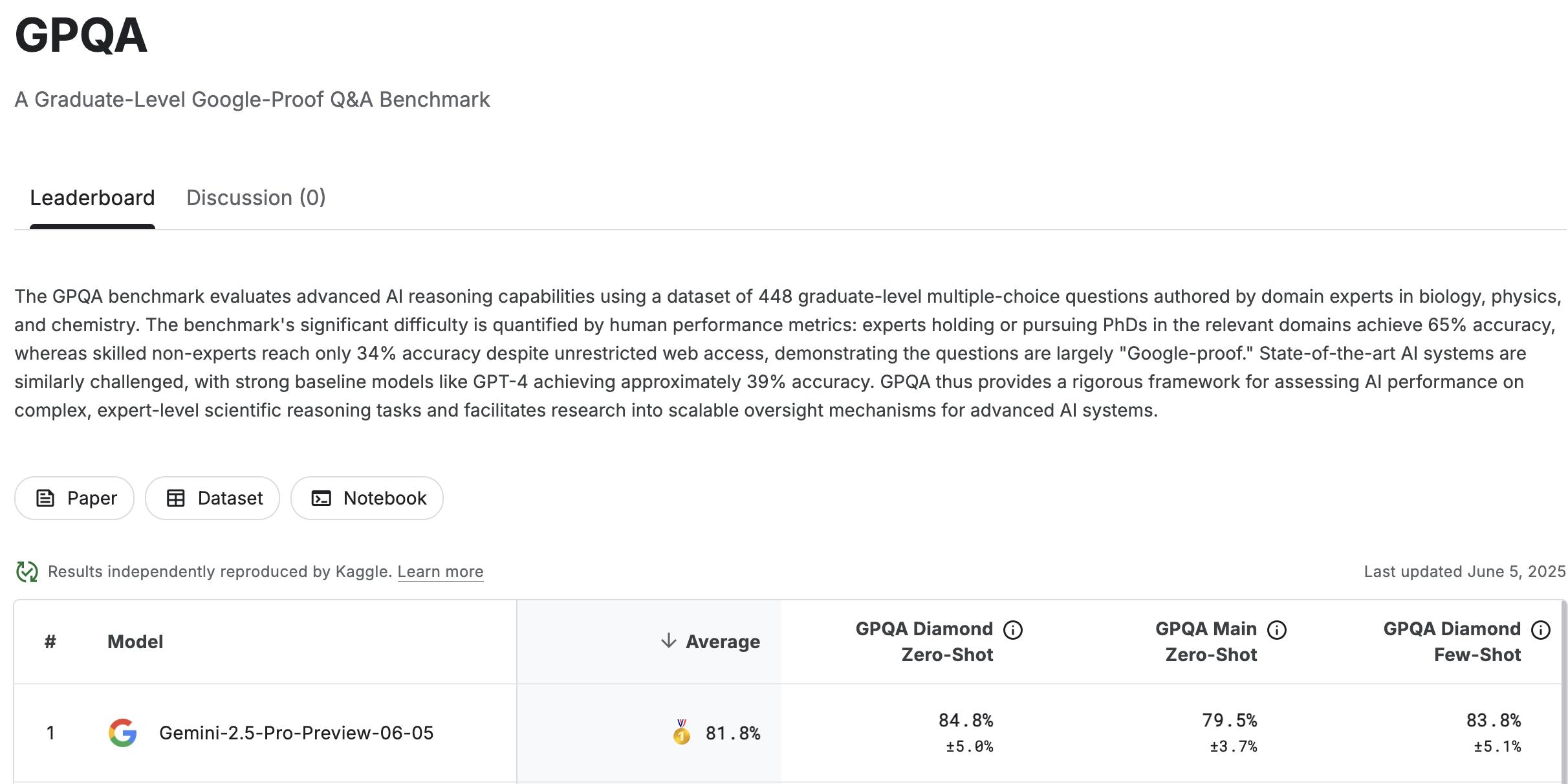

- Pick a model that performs well on GPQA

- Dust off our hands and call it a day

But this is a mistake.

- These benchmarks are not representative of the problems we want to solve for the users and data we want to solve them for.

- These benchmarks do not reflect the total cost of ownership

With the hardware store client, BM25 was sufficient. Queries were often only about 1-2 products; and often had a name. There weren’t really that many products (~30k), had mostly structured information, and products were rarely updated. A static sqlite database with bm25 extension, shipped with the app was great.

With the developer tooling company, we had less consistency in language used in their queries, so purely lexical search didn’t cut it. Late interaction actually worked fairly well here; but at the time the support for multi-vector indexing wasn’t quite there in many offerings, so it wasn’t a great fit in terms of complexity. So we implemented hybrid search.

The Only Playbook: Evals

The only playbook I can confidently give you is: run evals.

- Observe what is happening in the system

- Diagnose what is going wrong

- Improve the system

When you have this process in place, you develop a superpower.

Every “should I do X?” question is answered with “let’s run an eval and find out”.

You will know

- what model to use

- what chunking strategy to use

- what embedding model to use

- what retriever to use

- … and everything you need to know to make a decision.

But you will have to fish.

Because the answer is always evals.

Want to Learn How to Build Evals for your team?

If you want to learn the skills but do it yourself…

If you want a more hands-on done-with-you approach…