Picture this: A data scientist spends hours configuring YAML files, debugging pipeline errors, and switching between five different tool UIs just to test a simple feature idea. Meanwhile, an analyst in Excel has already cleaned their data, built a model, and presented insights to stakeholders. Something’s wrong with this picture – and it’s not the analyst using Excel.

Why Data Science is a “Wicked Problem”

Data science challenges share key characteristics with what design theorists call “wicked problems”:

- The solution shapes the problem: How we model data influences what solutions we can discover

- Multiple stakeholder perspectives: Each role brings different priorities and constraints

- Evolving constraints: Requirements and resources change as understanding deepens

- Never truly finished: Solutions require continuous refinement and adaptation

This isn’t just academic theory – it explains why our current tooling often falls short. We’ve optimized for production stability while sacrificing the iterative nature of data science work.

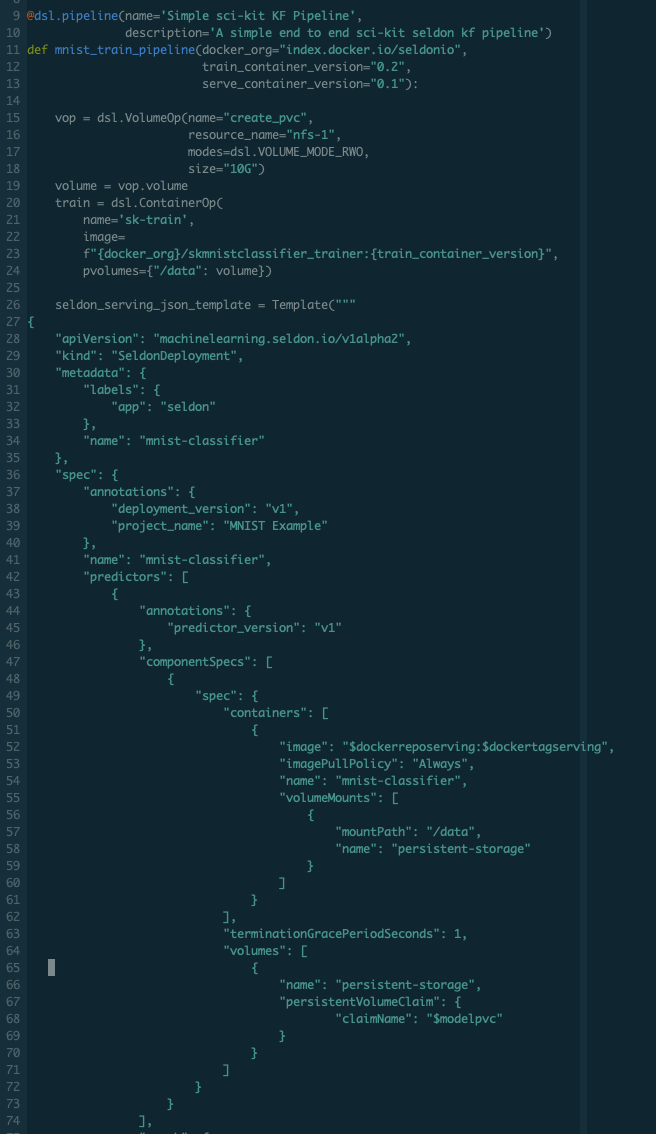

Consider this example of modern ML infrastructure:

Is this really how data scientists want to work?

What Makes Excel Work (Despite Its Flaws)

Excel has serious limitations:

- Limited scale

- Poor version control

- Lack of audit trails

- Potential stability issues

Yet people keep using it. As Gavin Mendel-Gleason aptly notes:

“People refuse to stop using Excel because it empowers them and they simply don’t want to be disempowered.”

Excel succeeds because it provides two critical capabilities:

- Iterability: Users can rapidly experiment, seeing results immediately

- Accessibility: Anyone can view and work with the data at their skill level

Put differently, Excel prioritizes the essential problem (understanding data) over accidental complexity (infrastructure concerns).

Building Better Data Science Tools

To create better tools for data science, we need to learn from Excel’s success while addressing its limitations. Future tools should:

1. Embrace Accessibility Through Layered APIs

- Provide simple interfaces for basic operations

- Enable progressive complexity for advanced users

- Support different user roles and skill levels

- Example: fastai’s layered API approach, which offers both high-level and low-level control
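
To make the layering idea concrete, here is a minimal sketch using only the Python standard library. The names `summarize`, `Pipeline`, and `drop_missing` are hypothetical and invented for illustration; they are not taken from fastai or any other library. The point is only that one entry point can serve a beginner with defaults while still exposing the lower layers to someone who needs them.

```python
from statistics import mean
from typing import Callable, Iterable, Optional

# A step is any function that takes a list of values and returns a new list.
Step = Callable[[list], list]


def drop_missing(values: list) -> list:
    """Low-level building block: remove None entries."""
    return [v for v in values if v is not None]


class Pipeline:
    """Mid-level layer: advanced users compose their own steps."""

    def __init__(self, steps: Iterable[Step]):
        self.steps = list(steps)

    def run(self, values: list) -> list:
        for step in self.steps:
            values = step(values)
        return values


def summarize(values: list, pipeline: Optional[Pipeline] = None) -> dict:
    """High-level layer: one call with defaults, overridable when needed."""
    pipeline = pipeline or Pipeline([drop_missing])
    cleaned = pipeline.run(values)
    return {"count": len(cleaned), "mean": mean(cleaned)}


# Beginner usage: one call, the default pipeline handles the cleaning.
basic = summarize([1, 2, None, 3])

# Advanced usage: same entry point, with a custom low-level step added.
clip_at_two = lambda vs: [min(v, 2) for v in vs]
custom = summarize([1, 2, None, 3], Pipeline([drop_missing, clip_at_two]))
```

Both calls go through the same function, so a user who outgrows the defaults does not have to switch tools or relearn the interface.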

2. Enable Rapid Iteration

- Minimize context switching between tools

- Provide immediate feedback on changes

- Allow experimentation without heavy reconfiguration

- Example: Databricks’ notebook-centric workflow that scales to production

3. Meet Users Where They Are

- Build SDK-first instead of UI-first

- Integrate with existing workflows (notebooks, CLI, etc.)

- Support multiple interfaces for different use cases

- Example: Papermill, the open-source tool Netflix uses to parameterize and run notebooks in production

4. Focus on Essential Problems

- Solve data understanding challenges first

- Handle infrastructure concerns behind the scenes

- Provide sensible defaults with room for customization

- Example: Modern data warehouses abstracting away distribution complexity
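
As a rough illustration of "sensible defaults with room for customization", the sketch below keeps infrastructure settings in a single configuration object that most users never touch. `RunConfig` and `run_query` are hypothetical names, the default values are arbitrary, and `run_query` is a stand-in that only describes the plan rather than executing anything.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class RunConfig:
    """Hypothetical settings a tool could manage on the user's behalf."""
    workers: int = 4
    memory_gb: int = 8
    retries: int = 3


def run_query(query: str, config: RunConfig = RunConfig()) -> str:
    """Stand-in for a real execution engine: it only reports the plan."""
    return (
        f"{query!r} on {config.workers} workers, "
        f"{config.memory_gb} GB, {config.retries} retries"
    )


# Most users never see the infrastructure settings.
default_plan = run_query("SELECT 1")

# Power users override only the field they care about.
big_plan = run_query("SELECT 1", replace(RunConfig(), workers=32))
```

The analyst's call and the power user's call differ by one argument, which is the kind of gradual exposure to complexity the list above argues for.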

The Path Forward

The future of data science tooling isn’t about adding more infrastructure – it’s about making complex infrastructure invisible while empowering users to solve real problems. We need tools that:

- Scale with complexity: Start simple, but support sophisticated use cases

- Enable collaboration: Make it easy for different roles to contribute

- Promote iteration: Support rapid experimentation and refinement

- Abstract wisely: Hide unnecessary complexity while maintaining power

Key Takeaways for Tool Builders and Users

For tool builders:

- Prioritize user workflow over infrastructure

- Build layered interfaces that grow with users

- Focus on reducing cognitive overhead

For practitioners:

- Evaluate tools based on iteration speed

- Look for solutions that scale with your needs

- Don’t accept unnecessary complexity

Data science will always involve complex problems. But our tools should help us tackle that essential complexity rather than adding accidental complexity. The next generation of data science tools must learn from Excel’s strengths while overcoming its limitations.

Remember: The best tool isn’t always the most sophisticated – it’s the one that helps you solve real problems most effectively.

Is Your Data Wicked?

If this post resonated with you, I would love to chat with you. Schedule a free consult to talk about how to tame your wicked data.

Credit:
