The Power of Semantically-Meaningful and Human-Readable Representations

As AI and data science leaders, we often focus on sophisticated algorithms and cutting-edge technologies. However, one fundamental yet frequently overlooked aspect can make or break your AI initiatives: how your data is represented. Let me share a practical guide that will transform how your team works with data. We’ll be using a dataset on obesity risk factors, and we’ll see how different approaches to data representation can lead to vastly different results.

Understanding the Dataset: Obesity Risk Factors

Before diving into the technical details, let’s understand the importance of our example dataset. Obesity is a significant global health challenge:

- Over 2 billion people worldwide are currently obese or overweight

- It’s classified as a serious chronic disease with both genetic and environmental factors

- The condition involves complex interactions between social, psychological, and dietary habits

- Research shows obesity can be prevented with proper intervention

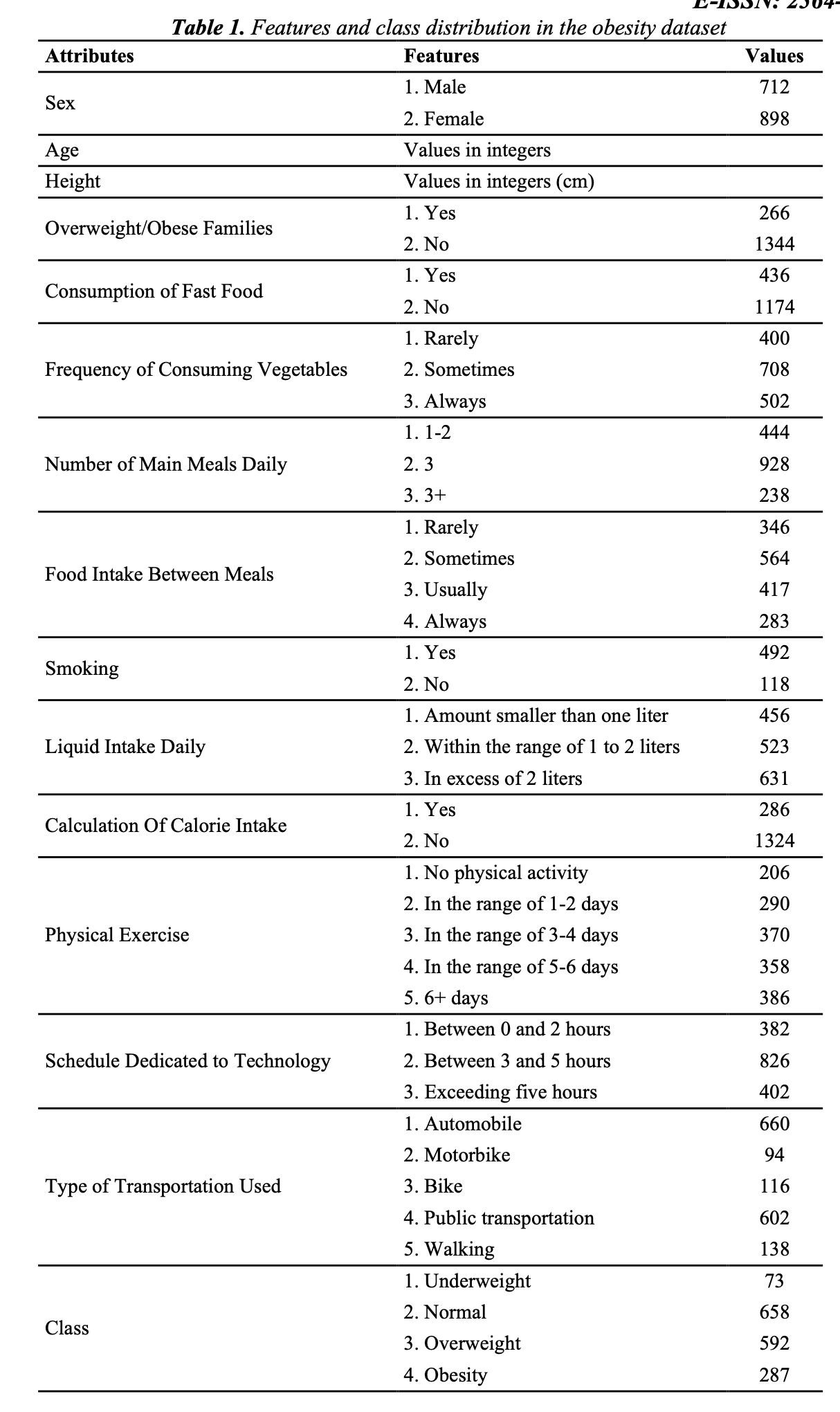

The dataset we’ll be working with comes from a comprehensive study aimed at identifying individuals at risk of obesity. It was created through an online survey of 1,610 individuals, collecting various lifestyle and health-related factors. This makes it an excellent example of real-world data that requires careful representation to be useful for analysis and modeling. But the encoding of the data is not completely clear.

Key features in the dataset include:

- Demographic information (age, sex)

- Physical measurements (height)

- Family history

- Dietary habits (fast food consumption, vegetable intake)

- Lifestyle factors (physical activity, technology usage)

- Health behaviors (smoking, liquid intake)

The Dataset Source

The dataset is available on Kaggle: Obesity Dataset on Kaggle

This dataset is particularly interesting because it:

- Contains a mix of categorical and numerical data

- Includes various encoding schemes that need transformation

- Represents real-world health data collection practices

- Demonstrates common data representation challenges

Why This Dataset Matters for Data Representation

The obesity dataset perfectly illustrates common challenges in data science:

- Mixed Data Types

- Numerical measurements (height, age)

- Categorical values (exercise frequency, meal patterns)

- Binary indicators (family history, fast food consumption)

- Encoding Complexity

- Different scales for different features

- Non-intuitive numeric codes for categorical data

- Implicit meaning in numeric values

- Real-World Relevance

- Healthcare data often uses similar encoding patterns

- Survey data typically requires significant transformation

- Public health applications need clear interpretation

The Hidden Cost of Poor Data Representation

Picture this: Your data scientist is staring at a dataset that looks like this:

import pandas as pd

df = pd.read_excel('Obesity_Dataset.xlsx')

print(df.head())

Sex Age Height Overweight_Obese_Family Consumption_of_Fast_Food

0 2 18 155 2 2

1 2 18 158 2 2

2 2 18 159 2 2

3 2 18 162 2 2

4 2 18 165 2 1

Fortunately, the dataset includes a data dictionary that can help us understand the encoding of the data.

Implementation Approach 1: Data Dictionary Mapping

First, let’s look at the traditional approach using a data dictionary:

data_dict = {

"Sex": {

1: "Male",

2: "Female"

},

"Overweight_Obese_Family": {

1: "Yes",

2: "No"

},

"Consumption_of_Fast_Food": {

1: "Yes",

2: "No"

},

"Frequency_of_Consuming_Vegetables": {

1: "Rarely",

2: "Sometimes",

3: "Always"

},

"Number_of_Main_Meals_Daily": {

1: "1-2 meals",

2: "3 meals",

3: "3+ meals"

},

"Food_Intake_Between_Meals": {

1: "Rarely",

2: "Sometimes",

3: "Usually",

4: "Always"

},

"Liquid_Intake_Daily": {

1: "Less than 1 liter",

2: "1-2 liters",

3: "More than 2 liters"

},

"Physical_Exercise": {

1: "No physical activity",

2: "1-2 days",

3: "2-4 days",

4: "4-5 days",

5: "6+ days"

},

"Schedule_Dedicated_to_Technology": {

1: "0-2 hours",

2: "3-5 hours",

3: "More than 5 hours"

}

}

def transform_with_dictionary(df):

better_df = df.copy()

# Fix common issues like misspellings and add units

better_df.rename(columns={

'Physical_Excercise': 'Physical_Exercise',

'Height': 'Height_cm',

'Age': 'Age_years',

}, inplace=True)

# Transform values using the data dictionary

for feature, mapping in data_dict.items():

if feature in better_df.columns:

better_df[feature] = better_df[feature].map(

lambda x: mapping.get(int(x), x)

)

return better_df

Implementation Approach 2: Using Type-Safe Classes

A more robust approach is to use type-safe classes to represent our data:

from dataclasses import dataclass

from enum import Enum

from typing import Optional

class Sex(Enum):

MALE = 1

FEMALE = 2

class FrequencyLevel(Enum):

RARELY = 1

SOMETIMES = 2

USUALLY = 3

ALWAYS = 4

class ExerciseFrequency(Enum):

NONE = 1

ONE_TO_TWO_DAYS = 2

TWO_TO_FOUR_DAYS = 3

FOUR_TO_FIVE_DAYS = 4

SIX_PLUS_DAYS = 5

@dataclass

class ObesityRecord:

sex: Sex

age_years: int

height_cm: float

overweight_obese_family: bool

consumption_of_fast_food: bool

frequency_of_vegetables: FrequencyLevel

number_of_main_meals: int

food_intake_between_meals: FrequencyLevel

smoking: bool

liquid_intake_daily: str

physical_exercise: ExerciseFrequency

technology_time: str

@classmethod

def from_row(cls, row):

return cls(

sex=Sex(row['Sex']),

age_years=row['Age'],

height_cm=row['Height'],

overweight_obese_family=row['Overweight_Obese_Family'] == 1,

consumption_of_fast_food=row['Consumption_of_Fast_Food'] == 1,

frequency_of_vegetables=FrequencyLevel(row['Frequency_of_Consuming_Vegetables']),

number_of_main_meals=row['Number_of_Main_Meals_Daily'],

food_intake_between_meals=FrequencyLevel(row['Food_Intake_Between_Meals']),

smoking=row['Smoking'] == 1,

liquid_intake_daily=data_dict['Liquid_Intake_Daily'][row['Liquid_Intake_Daily']],

physical_exercise=ExerciseFrequency(row['Physical_Exercise']),

technology_time=data_dict['Schedule_Dedicated_to_Technology'][row['Schedule_Dedicated_to_Technology']]

)

def transform_with_types(df):

records = []

for _, row in df.iterrows():

try:

record = ObesityRecord.from_row(row)

records.append(record)

except (ValueError, KeyError) as e:

print(f"Error processing row: {row}")

print(f"Error: {e}")

return records

TODO: RESULTS

Using the Type-Safe Implementation

# Read the dataset

df = pd.read_excel('Obesity_Dataset.xlsx')

# Transform using type-safe classes

records = transform_with_types(df)

# Example usage

first_record = records[0]

print(f"Sex: {first_record.sex.name}")

print(f"Exercise Frequency: {first_record.physical_exercise.name}")

print(f"Height: {first_record.height_cm} cm")

Benefits of Type-Safe Implementation

- Type Safety

- Catch errors at compile time

- Clear definition of valid values

- Self-documenting code

- Better IDE Support

- Autocomplete for valid values

- Type hints for development

- Easier refactoring

- Data Validation

- Automatic validation of inputs

- Clear error messages

- Consistent data structure

- Maintainability

- Single source of truth for data types

- Easier to update and modify

- Better code organization

Best Practices for Implementation

- Define Clear Types

- Use enums for categorical data

- Add proper type hints

- Document valid ranges

- Handle Errors Gracefully

- Implement proper error handling

- Log invalid data

- Provide meaningful error messages

- Document Everything

- Add docstrings to classes

- Document data transformations

- Keep data dictionary updated

The Impact on AI Projects

Using type-safe implementations delivers additional benefits:

- Reduced Errors

- Catch data issues early

- Prevent invalid states

- Better data quality

- Improved Development Speed

- Better IDE support

- Clearer code structure

- Easier debugging

- Better Collaboration

- Self-documenting code

- Clear data contracts

- Easier onboarding

Working Without a Data Dictionary

Often in real-world scenarios, you’ll encounter datasets without proper documentation. While this is not ideal, there are some systematic strategies you can use to make sense of the data.

1. Talk to Domain Experts

Your first step should be to talk to the domain experts who know the data best. They can provide valuable insights into the meaning of the data and help you understand the context in which it was collected. This can help you construct some assumptions and identify potential transformations to make to the data to make it more semantically meaningful and human readable. But what if that isn’t enough? Let’s discuss some other systematic steps.

2. Initial Data Analysis

First we can conduct some analysis on the columns to understand what the encodings might mean. A column might be numeric, but may only have a few unique values, indicating that it might actually be categorical! Also seeing the distribution of values might give us some hints as to what the units of the data are.

For example, this might be useful

def analyze_column_patterns(df, column_name):

"""Analyze patterns in a column to infer its meaning."""

unique_values = df[column_name].unique()

value_counts = df[column_name].value_counts()

print(f"\nAnalysis for {column_name}:")

print(f"Number of unique values: {len(unique_values)}")

print(f"Value distribution:\n{value_counts}")

print(f"Data type: {df[column_name].dtype}")

# Check if values are all integers or floats

numeric_values = pd.to_numeric(unique_values, errors='coerce')

all_numeric = not pd.isna(numeric_values).any()

if all_numeric:

print(f"Range: {min(unique_values)} to {max(unique_values)}")

TODO: RESULTS

3. Pattern Recognition Strategies

Beyond just looking at the distribution of values, we can systematically apply some heuristics to infer the type of the data in each column. Note: these are heuristics and not foolproof, but they can still be useful!

def infer_column_type(df, column_name):

"""Attempt to infer the type of data in a column."""

unique_values = df[column_name].unique()

value_count = len(unique_values)

# Binary values often indicate yes/no or true/false

if value_count == 2:

return "binary"

# Small number of unique values often indicates categorical

elif value_count <= 5:

return "categorical_small"

# Larger but limited set might be ordinal

elif value_count <= 10:

return "categorical_medium"

# Many unique values suggest continuous

else:

return "continuous"

def suggest_transformations(df):

"""Suggest transformations for each column."""

suggestions = {}

for column in df.columns:

col_type = infer_column_type(df, column)

if col_type == "binary":

suggestions[column] = {

"suggested_type": "boolean",

"transformation": "Map to True/False or Yes/No",

"example_values": df[column].unique().tolist()

}

elif col_type.startswith("categorical"):

suggestions[column] = {

"suggested_type": "categorical",

"transformation": "Create meaningful labels",

"example_values": df[column].value_counts().head().to_dict()

}

else:

suggestions[column] = {

"suggested_type": "continuous",

"transformation": "Consider units and scaling",

"range": [df[column].min(), df[column].max()]

}

return suggestions

TODO: RESULTS

3. Consulting Domain Experts

When working without a data dictionary, domain expertise becomes crucial. Here’s a structured approach to gathering expert input:

5. Practical Example

Here’s how to put it all together:

def analyze_unknown_dataset(df):

# Step 1: Initial analysis

print("Initial Analysis:")

for column in df.columns:

analyze_column_patterns(df, column)

# Step 2: Generate suggestions

suggestions = suggest_transformations(df)

# Step 3: Create validation sheet

validation_sheet = create_expert_validation_sheet(df, suggestions)

# Step 4: Build new data dictionary

builder = DataDictionaryBuilder()

# Example of documenting a column

builder.add_column(

"Physical_Exercise",

"ordinal",

"Frequency of physical exercise",

valid_values="Inferred from values 1-5"

)

builder.document_assumption(

"Physical_Exercise",

"Ascending values indicate increasing frequency"

)

return validation_sheet, builder.export_dictionary()

Best Practices When Working Without a Data Dictionary

- Lean on Domain Experts

- Get as much information from domain experts as possible

- Engage them early and often

- Document Everything

- Record all assumptions made

- Note any patterns observed

- Keep track of stakeholder input

- Validate Incrementally

- Start with obvious transformations

- Validate with domain experts

- Update documentation as you learn

- Build Robust Error Handling

- Account for unexpected values

- Log validation failures

- Create fallback options

- Monitor and Iterate

- Track transformation errors

- Update mappings as needed

- Refine assumptions over time

- Create Living Documentation

- Maintain version history

- Document change rationale

- Include example cases

Call to Action

- Audit Your Current Implementation

- Review current data handling

- Identify areas for type safety

- Document data structures

- Create Migration Plan

- Start with critical datasets

- Define type-safe classes

- Plan incremental updates

- Implement and Test

- Add type safety gradually

- Test thoroughly

- Monitor for improvements

Remember: Investing in proper data representation and type safety pays dividends throughout your AI project lifecycle.