Evaluating Multi-Turn AI Agents

A Systematic Approach to Building Reliable Agent Systems

AI is Fundamentally Empirical

We can’t predict what will work.

- LLMs are highly data-dependent

- Performance varies wildly across use cases

- We have priors (educated guesses)…

- …but we must experiment to find out

The Challenge: Understanding

Long-running agents are hard to evaluate:

- Cost: Running full eval suites gets expensive fast ($10-50 per test run)

- Latency: Multi-turn conversations take 30s-5min to execute

- Context Overload: Which of the 47 steps actually broke?

Why This Matters

If we can’t evaluate agents systematically:

- We can’t understand when/why they fail

- We can’t improve them reliably

- We can’t maintain them as requirements change

- We can’t ship them with confidence

The solution: Make evaluation straightforward through systematic decomposition.

What We’ll Cover Today

- The Framework: Evaluation pyramid and decomposition strategies

- Implementation: Code design, tracing, and system evaluation

- In Practice: Real results and research validation

Part 1: The Framework

Evaluation Pyramid & Decomposition

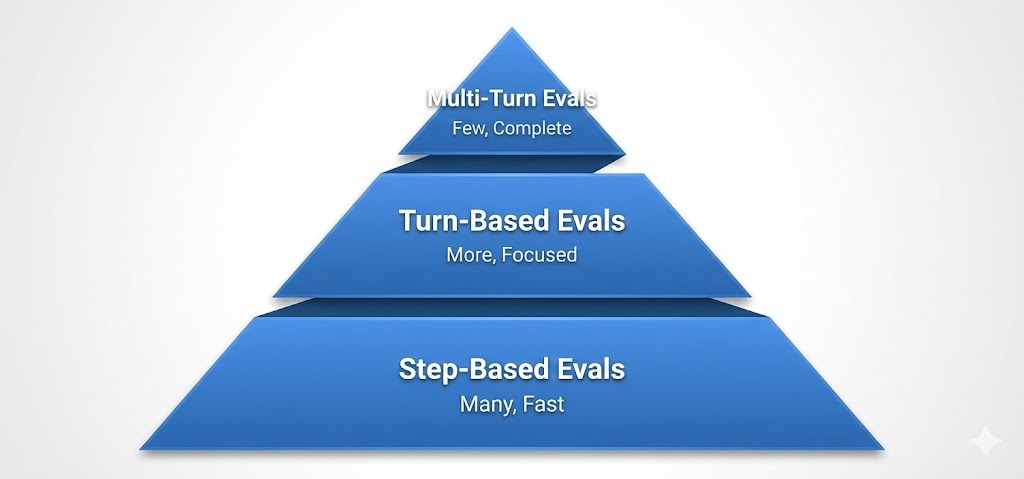

The Evaluation Pyramid

Not all evals are created equal:

Principle: Test narrow/fast at the bottom, broad/slow at the top

Step-Based Evals: The Foundation

Test individual components in isolation:

- Tool calls: “Did it call the right function?”

- Parameter extraction: “Did it parse user input correctly?”

- Domain logic: “Did it apply business rules?”

- Validation: “Did it catch invalid inputs?”

Why: Fast feedback (<1s), easy to debug, cheap (<$0.01)

Step-Based Eval: Code Example

Fast, deterministic, easy to debug
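The slide's original code is not reproduced here, so the following is only a minimal sketch of what a step-based eval might look like. It assumes a hypothetical pure parsing step, `extract_order_id`, and a hypothetical `#<digits>` order ID format; neither comes from the original system. It covers two of the checks listed above: parameter extraction and validation.

```python
import re
from typing import Optional

# Hypothetical step under test: a pure parser with no LLM call and no I/O.
# The '#<digits>' order ID format is an assumption made for illustration.
ORDER_ID_PATTERN = re.compile(r"#(\d{3,})")


def extract_order_id(message: str) -> Optional[str]:
    """Return the first order ID written as '#<digits>', or None if absent."""
    match = ORDER_ID_PATTERN.search(message)
    return match.group(1) if match else None


def test_extracts_order_id():
    # Parameter extraction: did it parse the user input correctly?
    assert extract_order_id("I need a refund for order #12345") == "12345"


def test_rejects_message_without_order_id():
    # Validation: did it catch input that has no usable order ID?
    assert extract_order_id("I need a refund") is None
```

Because the step is a plain function, these tests need no model, network, or fixtures, which is what keeps this layer of the pyramid cheap.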

Turn-Based Evals: The Middle Layer

Test single-turn interactions:

- User message → Agent response

- Check partial ordering of tool calls

  - “Read must happen before write”

  - “Verify identity before granting access”

- Validate tool parameters and response quality

Why: Catches integration issues, still relatively fast (~5-10s per test)
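The partial-ordering check above can be sketched as a small helper over the list of tool names called during a turn. This is one possible formulation, not the author's implementation; the tool names in `RULES` are hypothetical.

```python
from typing import Iterable, List, Tuple


def ordering_violations(
    tool_calls: List[str],
    must_precede: Iterable[Tuple[str, str]],
) -> List[Tuple[str, str]]:
    """Return each (before, after) rule that the call sequence violates.

    A rule is violated when `after` is called without `before` having been
    called earlier in the same sequence. Rules whose `after` tool never runs
    are treated as satisfied.
    """
    violations = []
    for before, after in must_precede:
        if after not in tool_calls:
            continue
        first_after = tool_calls.index(after)
        if before not in tool_calls[:first_after]:
            violations.append((before, after))
    return violations


# Hypothetical tool names standing in for "read before write" and
# "verify identity before granting access".
RULES = [
    ("read_record", "write_record"),
    ("verify_identity", "grant_access"),
]
```

A turn-based test can then assert `ordering_violations(names, RULES) == []`, where `names` is the ordered list of tool names from the response. Only the relative order is constrained, so the agent remains free to interleave other calls.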

Turn-Based Eval: Code Example

    def test_handle_refund_request():
        """Test that the agent correctly handles a refund request."""
        # `agent` and `Conversation` come from the application under test.
        conversation = Conversation()
        response = agent.handle_turn(
            conversation,
            user_msg="I need a refund for order #12345",
        )

        # Check it called the right tool
        assert response.tool_calls[0].name == "lookup_order"
        assert response.tool_calls[0].args["order_id"] == "12345"

        # Check response quality
        assert "refund" in response.message.lower()
        assert response.needs_user_confirmation is True

Multi-Turn Evals: The Challenge

Test complete conversations:

- Full user journey from start to finish

- Simulate the user: respond to the agent naturally

- Multiple turns of interaction

- Context accumulation across turns

- Did the agent achieve the user’s goal?

Problem: Expensive ($1-5 per test), slow (30s-5min), hard to debug

Solution: Break into milestones
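To make the simulated-user idea concrete, here is a minimal sketch of a conversation driver. All names (`run_conversation`, `Transcript`, the scripted stand-ins) are hypothetical. A real eval would plug in the actual agent and, most likely, an LLM-backed user simulator in place of the scripted one.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional, Tuple

Turn = Tuple[str, str]  # (speaker, text)


@dataclass
class Transcript:
    turns: List[Turn] = field(default_factory=list)
    goal_reached: bool = False


def run_conversation(
    agent_reply: Callable[[List[Turn]], str],
    user_reply: Callable[[List[Turn]], Optional[str]],
    goal_check: Callable[[List[Turn]], bool],
    max_turns: int = 10,
) -> Transcript:
    """Alternate simulated-user and agent messages until the goal or the turn cap."""
    transcript = Transcript()
    for _ in range(max_turns):
        user_msg = user_reply(transcript.turns)
        if user_msg is None:  # the simulated user has nothing left to say
            break
        transcript.turns.append(("user", user_msg))
        transcript.turns.append(("agent", agent_reply(transcript.turns)))
        if goal_check(transcript.turns):
            transcript.goal_reached = True
            break
    return transcript


# Scripted stand-ins so the loop can be exercised without a model.
def scripted_user(turns: List[Turn]) -> Optional[str]:
    script = ["I can't log in", "My email is a@example.com"]
    sent = sum(1 for speaker, _ in turns if speaker == "user")
    return script[sent] if sent < len(script) else None


def stub_agent(turns: List[Turn]) -> str:
    last_user_msg = turns[-1][1]
    return "Reset link sent" if "@" in last_user_msg else "What is your email?"


def reset_sent(turns: List[Turn]) -> bool:
    return turns[-1] == ("agent", "Reset link sent")


result = run_conversation(stub_agent, scripted_user, reset_sent)
```

The turn cap matters in practice: without it, a confused agent and a patient simulated user can loop indefinitely, which is where much of the cost and latency comes from.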

Milestones: Decomposing Multi-Turn

Instead of: Did the conversation succeed? ✅/❌

Ask: What are the critical checkpoints for this user flow?

- Intent captured?

- Necessary info gathered?

- Tool executed successfully?

- Policy compliance checked?

- Response delivered clearly?

Key insight: Milestones are user-flow specific - different flows need different checkpoints

Milestone Example: Password Reset

Flow: User can’t log in → Agent resets password

- Intent: Understood password issue

- Identity: Confirmed user identity

- Diagnosis: Forgot vs locked account

- Remedy: Choose reset link vs unlock

- Execute: Send reset successfully

- Confirm: User can proceed

Each milestone is a gradable checkpoint

Password reset journey with milestones

Milestone-Based Evaluation: Code

    def evaluate_password_reset(trace):
        """Analyze trace to check milestones"""
        # The check_* helpers and MilestoneReport are defined elsewhere.
        milestones = {
            "intent": check_intent_type(trace, expected="password_reset"),
            "identity": check_verified(trace, "verify_user_identity"),
            "diagnosis": check_tool_result(trace, "check_account_status",
                                           has_field="account_locked"),
            "remedy": check_tool_called(trace, "send_reset_link",
                                        with_param="user_id"),
            "execute": check_attribute(trace, "reset.sent", equals=True),
            "confirm": check_final_state(trace, "user_can_login"),
        }

        return MilestoneReport(milestones=milestones,
                               first_failure=find_first_failure(milestones))

Failure Funnels: Visualizing Drop-Off

Track milestone completion rates across many runs:

Biggest drop = highest leverage fix
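One way to compute such a funnel from per-run milestone results is sketched below. The functions are illustrative assumptions, including this version of `find_first_failure`; the milestone names follow the password reset example. A run counts toward a milestone only if it passed every earlier one.

```python
from typing import Dict, List, Optional

# Milestone order for the password reset flow.
MILESTONE_ORDER = ["intent", "identity", "diagnosis", "remedy", "execute", "confirm"]


def find_first_failure(milestones: Dict[str, bool]) -> Optional[str]:
    """Return the earliest milestone that did not pass, or None if all passed."""
    for name in MILESTONE_ORDER:
        if not milestones.get(name, False):
            return name
    return None


def failure_funnel(runs: List[Dict[str, bool]]) -> Dict[str, float]:
    """Fraction of runs that passed each milestone and every earlier one."""
    if not runs:
        return {name: 0.0 for name in MILESTONE_ORDER}
    reached = {name: 0 for name in MILESTONE_ORDER}
    for milestones in runs:
        for name in MILESTONE_ORDER:
            if not milestones.get(name, False):
                break  # later milestones do not count once one fails
            reached[name] += 1
    return {name: reached[name] / len(runs) for name in MILESTONE_ORDER}


def biggest_drop(funnel: Dict[str, float]) -> str:
    """Name the milestone with the largest drop from the previous stage."""
    previous = 1.0
    worst_name, worst_drop = MILESTONE_ORDER[0], -1.0
    for name in MILESTONE_ORDER:
        drop = previous - funnel[name]
        if drop > worst_drop:
            worst_name, worst_drop = name, drop
        previous = funnel[name]
    return worst_name
```

Feeding `failure_funnel` the milestone dictionaries from many runs gives the completion rate per stage, and `biggest_drop` points at the stage to investigate first.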

Failure Funnel: Real Example

Password Reset Flow - Initial deployment:

The funnel showed a 28% drop at remedy selection

- Problem: The agent chose “unlock account” when it should have sent a reset link

- Root cause: Ambiguous phrasing in account status tool response

- Fix: Clarified tool output format + added examples

- Result: 42% → 70% overall success rate

Don’t fix everything - fix the biggest leak first

Part 2: Implementation

Code Design, Tracing, & System Evaluation

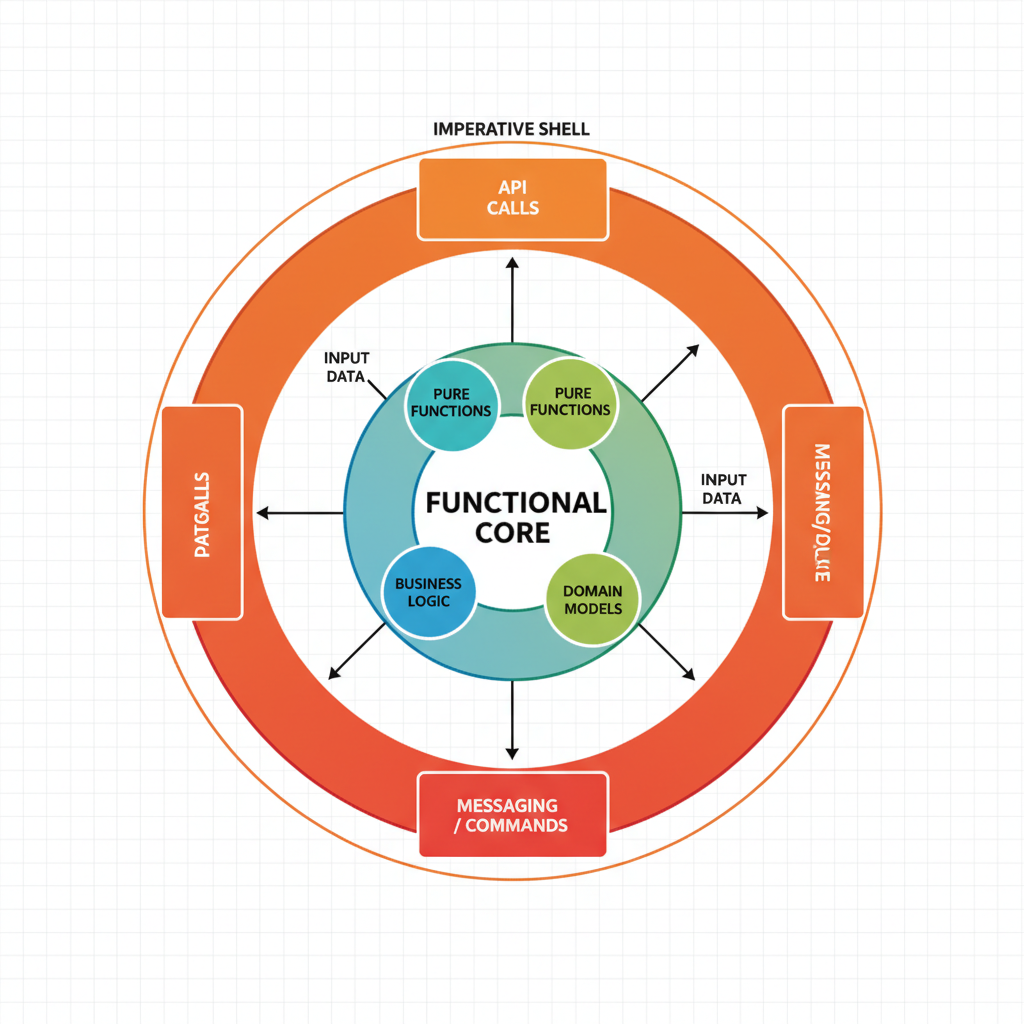

Code Design for Testability

Functional Core, Imperative Shell

Functional Core

- Pure functions

- No I/O or LLM calls

- Deterministic

- Easy to test

Imperative Shell

- API calls

- Database writes

- LLM invocations

- Thin layer

Result: 80% of your code is fast to test with step-based evals

Before: Tangled Code

    async def handle_refund(user_message: str):
        """Everything tangled together - hard to test"""
        # LLM call embedded in business logic
        response = await llm.complete(
            f"Extract order ID from: {user_message}"
        )
        order_id = response.text.strip()

        # Database call embedded
        order = await db.get_order(order_id)

        # Business logic mixed with I/O
        if order.amount > 100:
            await db.create_refund(order_id, order.amount)
            return "Refund processed"
        else:
            return "No refund needed"

Problem: Can’t test business logic without LLM/DB

After: Functional Core + Imperative Shell

    # CORE: Pure function, easy to test
    def should_process_refund(order: Order) -> RefundDecision:
        """Business logic - no I/O"""
        if order.amount > 100:
            return RefundDecision(
                approve=True,
                amount=order.amount,
                reason="Amount exceeds threshold",
            )
        return RefundDecision(approve=False, reason="Below threshold")


    # SHELL: Thin layer for I/O
    async def handle_refund(user_message: str):
        """Orchestration - delegates to core"""
        order_id = await extract_order_id(user_message)  # LLM
        order = await db.get_order(order_id)  # DB

        decision = should_process_refund(order)  # CORE - pure!

        if decision.approve:
            await db.create_refund(order_id, decision.amount)  # DB

        return format_response(decision)  # Pure formatting

Functional core and imperative shell architecture

Testing the Functional Core

    def test_refund_decision_above_threshold():
        """Fast, deterministic, no mocks"""
        order = Order(id="123", amount=150)
        decision = should_process_refund(order)

        assert decision.approve is True
        assert decision.amount == 150
        assert "threshold" in decision.reason


    def test_refund_decision_below_threshold():
        order = Order(id="456", amount=50)
        decision = should_process_refund(order)

        assert decision.approve is False

Runs in milliseconds. No LLM. No database. No mocks.

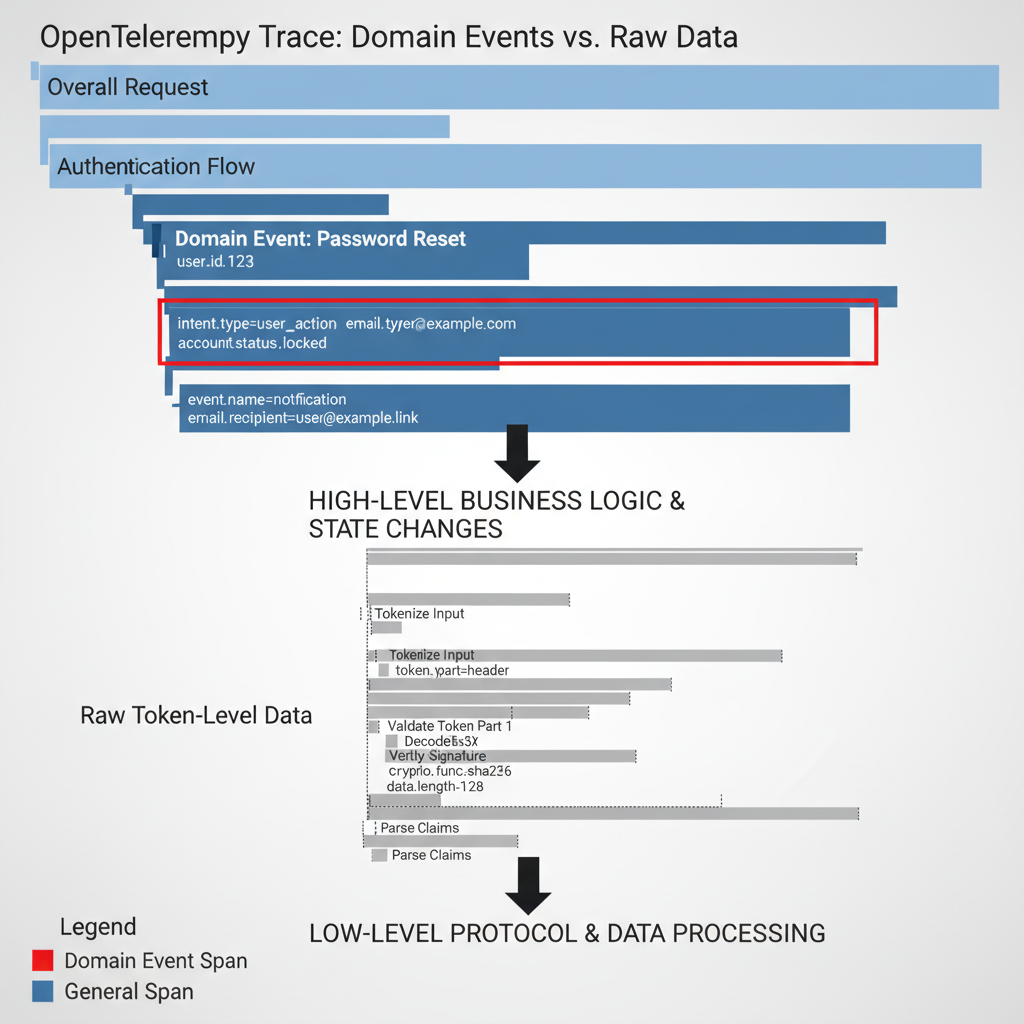

Tracing, Not Just Logging

Don’t log tokens. Trace domain events.

- Traces capture structure of execution

- Domain events are stable across model changes

- Traces enable milestone evaluation

- Traces support distributed debugging

Bad: Token Logging

Problem:

- Changes every time you update the model

- Hard to search/query

- No structure for evaluation

Good: Domain Event Tracing

    from opentelemetry import trace

    tracer = trace.get_tracer(__name__)


    async def classify_intent(request: UserRequest) -> Intent:
        with tracer.start_as_current_span("classify_intent") as span:
            # Trace input (domain model)
            span.set_attribute("request.user_id", request.user_id)
            span.set_attribute("request.session_id", request.session_id)

            # Make LLM call
            response = await llm.complete(f"Classify: {request.message}")

            # Parse to domain model
            intent = parse_intent(response.text)

            # Trace output (domain model).
            # Attribute values must be str, bool, int, float, or a sequence
            # of one of those, so `intent.entities` is assumed to be a list
            # of strings here.
            span.set_attribute("intent.type", intent.type)
            span.set_attribute("intent.confidence", intent.confidence)
            span.set_attribute("intent.entities", intent.entities)

            return intent

Trace structure with domain events vs raw tokens

Trace-Based Milestone Evaluation

    def evaluate_milestone_from_trace(trace):
        """Check if intent recognition milestone was achieved"""
        intent_span = trace.get_span_by_name("classify_intent")

        if not intent_span:
            return False  # Milestone not reached

        # Check domain attributes
        intent_type = intent_span.get_attribute("intent.type")
        confidence = intent_span.get_attribute("intent.confidence")

        # Milestone achieved if intent was classified with high confidence.
        # Guard against a missing confidence attribute before comparing.
        return (
            intent_type is not None
            and confidence is not None
            and confidence > 0.7
        )

Traces make milestones queryable
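The evaluation code above relies on a trace object with `get_span_by_name` and `get_attribute`. The deck does not show where that object comes from. For local experiments, a minimal in-memory stand-in might look like the sketch below. The `Span` and `Trace` classes and `intent_milestone_reached` are hypothetical and are not part of OpenTelemetry's API.

```python
from dataclasses import dataclass, field
from typing import Any, Dict, List, Optional


@dataclass
class Span:
    """Hypothetical span record: a name plus domain attributes."""
    name: str
    attributes: Dict[str, Any] = field(default_factory=dict)

    def get_attribute(self, key: str) -> Any:
        return self.attributes.get(key)


@dataclass
class Trace:
    """Hypothetical trace: an ordered list of spans from one run."""
    spans: List[Span] = field(default_factory=list)

    def get_span_by_name(self, name: str) -> Optional[Span]:
        """Return the first span with this name, or None."""
        return next((s for s in self.spans if s.name == name), None)


def intent_milestone_reached(trace: Trace, min_confidence: float = 0.7) -> bool:
    """Milestone check: intent classified with confidence above the threshold."""
    span = trace.get_span_by_name("classify_intent")
    if span is None:
        return False
    confidence = span.get_attribute("intent.confidence")
    return (
        span.get_attribute("intent.type") is not None
        and confidence is not None
        and confidence > min_confidence
    )
```

In a real setup the spans would be collected from the tracing backend or an exporter rather than built by hand. The point of the sketch is that milestone checks only read span names and domain attributes, never raw model output.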

Evaluate the System, Not the API

Prompt playgrounds miss the mark

They test:

- “Did the LLM generate good text?”

You need to test:

- “Did the SYSTEM solve the user’s problem?”

The system includes: prompts + tools + business logic + error handling + retry logic + validation
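As a rough illustration of the difference, the sketch below tests a tiny "system" made of a prompt, retry logic, and validation around one model call, using a fake model that fails once. `FlakyLLM` and `resolve_order_id` are hypothetical names. The point is that the assertions target the system's behavior, not the quality of the generated text.

```python
from typing import Optional


class FlakyLLM:
    """Hypothetical LLM stand-in: fails once, then returns a canned completion."""

    def __init__(self, completion: str):
        self.completion = completion
        self.calls = 0

    def complete(self, prompt: str) -> str:
        self.calls += 1
        if self.calls == 1:
            raise TimeoutError("simulated transient failure")
        return self.completion


def resolve_order_id(message: str, llm, max_attempts: int = 3) -> Optional[str]:
    """Tiny system under test: prompt + retry + validation around one LLM call."""
    for _ in range(max_attempts):
        try:
            raw = llm.complete(f"Extract order ID from: {message}")
        except TimeoutError:
            continue  # retry logic is part of the system being evaluated
        candidate = raw.strip().lstrip("#")
        # Validation: accept only purely numeric IDs.
        return candidate if candidate.isdigit() else None
    return None


def test_system_recovers_from_transient_failure():
    llm = FlakyLLM(" #12345 ")
    assert resolve_order_id("Refund order #12345", llm) == "12345"
    assert llm.calls == 2  # one failure, then one successful retry


def test_system_rejects_malformed_model_output():
    llm = FlakyLLM("I could not find an order ID")
    assert resolve_order_id("Refund please", llm) is None
```

A prompt playground would judge only the canned completion. These tests also exercise what happens when the call fails or the output is unusable.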

Part 3: In Practice

Real Results & Research Validation

Research Backing This Approach

Recent work supports layered evaluation:

- AgentBoard (2024): Fine-grained progress metrics, step-level analysis

- MT-Eval (2024): Multi-turn interaction patterns (recollection, expansion, refinement, follow-up)

- Survey on LLM Agent Evaluation (2025): 250 sources, emphasizes complementary granular + holistic metrics

- LangSmith Multi-turn Evals (2024): Industry validation of milestone-based approach

The field is converging on multi-level evaluation strategies

Summary: The Complete System

- Embrace experimentation - AI is empirical, iterate fast

- Test at multiple levels - Step → Turn → Multi-turn pyramid

- Break into milestones - Make multi-turn conversations gradable

- Use failure funnels - Find and fix the biggest leaks

- Design for testability - Functional core, imperative shell

- Trace domain events - Stable foundation for evals

- Evaluate the system - Not just the LLM

Start small: Pick one capability, build one pyramid

Resources

Blog post:

- https://skylarbpayne.com/posts/multi-turn-eval/

Research Papers:

Questions?

Contact me: me@skylarbpayne.com