    graph TB
        subgraph "Host Applications"
            A[Claude Desktop]
            B[VS Code + Cursor]
            C[Your AI App]
        end

        subgraph "MCP Clients"
            D[Client 1]
            E[Client 2]
            F[Client 3]
        end

        subgraph "MCP Servers"
            G[GitHub Server]
            H[Database Server]
            I[File System Server]
            J[Your Custom Server]
        end

        A --> D
        B --> E
        C --> F
        D --> G
        E --> H
        F --> I
        F --> J

Everything You Always Wanted to Know About MCP

But Were Afraid to Ask

2025-07-24

About Me

Empowering you to build AI products users love.

And permanently ditch those 3 AM debugging sessions.

- Building AI products for over a decade at Google, LinkedIn, and in AI-based diagnostics (healthcare)

- Trained over 100 engineers to build AI products

- Hands-on executive experience building the teams that build AI products

Fun fact: my favorite movie is Mean Girls (I have a party for it every October 3rd).

So… What is MCP, really?

The USB-C for AI

Remember the cable chaos before USB-C?

Before USB-C

- Different cables for every device

- Proprietary connectors everywhere

- Vendor lock-in and incompatibility

- Consumer frustration

After USB-C

- Universal connector for all devices

- One cable to rule them all

- Interoperability across vendors

- Innovation acceleration

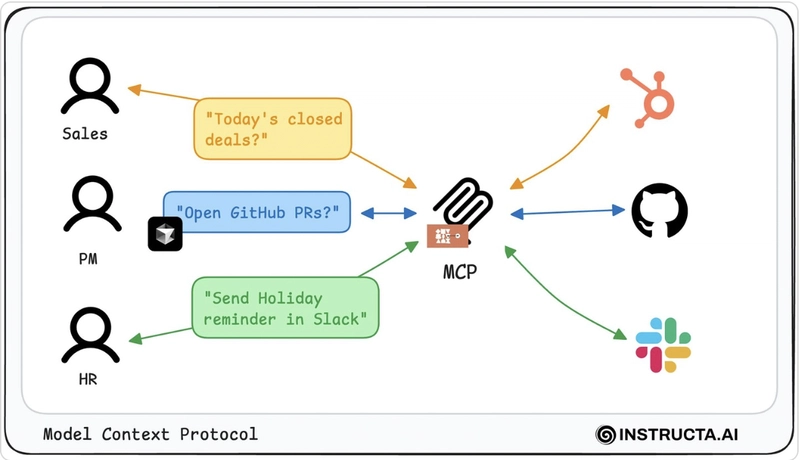

The AI Integration Problem

Today’s Reality

- Every AI app builds custom integrations

- Fragmented, brittle connections

- Developers reinvent the wheel

The MCP Vision

- Standard protocol for AI integrations

- Build once, use everywhere

- Composable ecosystem

Result: Focus on value, not plumbing

MCP Architecture

One server works with any MCP-compatible host!

Three Core Primitives

| Primitive | Purpose | Example |
|---|---|---|
| Resources | Read-only data | ArXiv paper abstracts |
| Tools | Actions with side effects | Create GitHub Gist |
| Prompts | Workflow templates | Research assistant workflow |

Simple but powerful: Everything an AI agent needs
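On the wire, each primitive is reached through a JSON-RPC 2.0 request. The sketch below builds one request per primitive using the method names from the MCP specification (`resources/read`, `tools/call`, `prompts/get`). The `arxiv://` URI, the prompt name `research_assistant`, and the helper `jsonrpc_request` are assumptions made up for illustration; only `create_gist` comes from the demo.

```python
import itertools
import json

_ids = itertools.count(1)  # JSON-RPC requests carry a unique id


def jsonrpc_request(method: str, params: dict) -> dict:
    """Build a JSON-RPC 2.0 request envelope (hypothetical helper)."""
    return {"jsonrpc": "2.0", "id": next(_ids), "method": method, "params": params}


# Resource: read-only data addressed by URI (this URI scheme is made up).
read_abstract = jsonrpc_request("resources/read", {"uri": "arxiv://abstract/1706.03762"})

# Tool: an action with side effects, called by name with arguments.
create_gist = jsonrpc_request(
    "tools/call",
    {"name": "create_gist", "arguments": {"title": "notes.md", "body": "# Findings"}},
)

# Prompt: a reusable workflow template, fetched by name and filled with arguments.
get_prompt = jsonrpc_request(
    "prompts/get",
    {"name": "research_assistant", "arguments": {"query": "transformer optimization"}},
)

if __name__ == "__main__":
    for request in (read_abstract, create_gist, get_prompt):
        print(json.dumps(request))
```

In practice a client library such as FastMCP or Mirascope builds these envelopes for you; the point is only that all three primitives share one message shape.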

Demo: Research Assistant

What It Does

- Search ArXiv for papers

- Analyze abstracts and content

- Synthesize research findings

- Create structured GitHub Gist

MCP Components

- ArXiv MCP Server (external tool)

- GitHub Gist Server (custom)

- Mirascope Client (host)

- Server composition with FastMCP

Demo time! Let’s see it in action

Step 1: Server Composition

From demo/server.py:

    from fastmcp import FastMCP
    from gist_mcp import mcp as gist_mcp
    import uvicorn

    # Proxy to external ArXiv MCP server
    arxiv_mcp = FastMCP.as_proxy({
        "mcpServers": {
            "arxiv-mcp-server": {
                "command": "uv",
                "args": [
                    "tool", "run", "arxiv-mcp-server",
                    "--storage-path", ".tmp/arxiv-mcp-server"
                ]
            }
        }
    })

    # Main server combining capabilities
    mcp = FastMCP("My MCP Server")
    mcp.mount(arxiv_mcp)  # ArXiv search
    mcp.mount(gist_mcp)  # Gist creation

    if __name__ == "__main__":
        uvicorn.run(mcp.sse_app(), host="0.0.0.0", port=8000)

Step 2: Custom Gist Server

From demo/gist_mcp.py:

    import os

    import httpx
    from fastmcp import FastMCP

    GITHUB_TOKEN = os.environ.get("GITHUB_PERSONAL_ACCESS_TOKEN")

    mcp = FastMCP("Gist Creator")

    @mcp.tool(
        name="create_gist",
        description="Create a GitHub Gist using the configured personal access token.",
    )
    async def create_github_gist(title: str, body: str, description: str = "", public: bool = True):
        url = "https://api.github.com/gists"
        headers = {"Authorization": f"token {GITHUB_TOKEN}"}
        payload = {
            "description": description,
            "public": public,
            "files": {title: {"content": body}}
        }
        async with httpx.AsyncClient() as client:
            response = await client.post(url, json=payload, headers=headers)
            response.raise_for_status()
            return response.json()

Step 3: AI Agent Client

From demo/client.py:

    from mirascope import llm, prompt_template
    from mirascope.mcp import sse_client

    @llm.call(provider="openai", model="gpt-4.1-mini")
    @prompt_template("""
    SYSTEM: You are a helpful research assistant.
    You use arxiv search tools to search for papers to answer the user's question.
    You always write your findings in a structured format to a github gist when you are done.
    Remember: you MUST create a github gist.
    USER: {query}
    MESSAGES: {history}
    """)
    async def mini_research(query: str, *, history=None): ...

    async def main():
        async with sse_client("http://localhost:8000/sse") as client:
            tools = await client.list_tools()
            resp = await run(query, tools=tools)  # run() is the agent loop shown in Step 4

Step 4: Agent Loop

Key parts from demo/client.py:

    import asyncio

    async def _process_tools(resp):
        """Process tool calls concurrently for efficiency."""
        if tools := resp.tools:
            for t in tools:
                print('Calling', t._name())
            tasks = [t.call() for t in tools]
            tool_results = await asyncio.gather(*tasks)
            return list(zip(tools, tool_results))
        return None

    async def run(query: str, *, tools, max_steps: int = 10):
        """Main agent loop: continue until done or max steps reached."""
        done, history, i = False, [], 0
        while not done and i < max_steps:
            resp, history, done = await _one_step(query, tools=tools, history=history)
            i += 1
        return resp

Running the Demo

Simple setup:

Try it: “Research recent advances in transformer architecture optimization”
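The Step 4 loop calls `_one_step`, which the slides do not show. To see the control flow without an API key or a running server, here is a minimal offline sketch. Everything in it is a stand-in: `FakeResponse`, `fake_model`, and `one_step` are hypothetical names, and the "tools" are plain strings rather than MCP calls. It is not the code in `demo/client.py`.

```python
import asyncio
from dataclasses import dataclass, field
from typing import List, Optional, Tuple


@dataclass
class FakeResponse:
    content: str
    tool_calls: List[str] = field(default_factory=list)


async def fake_model(query: str, history: List[str]) -> FakeResponse:
    """Stand-in for the LLM: search first, then write a gist, then finish."""
    if not history:
        return FakeResponse("searching", ["search_papers"])
    if len(history) == 1:
        return FakeResponse("writing", ["create_gist"])
    return FakeResponse(f"Done: {query}")


async def one_step(query: str, history: List[str]) -> Tuple[FakeResponse, List[str], bool]:
    """One turn: ask the model, run any requested tools, report whether we are done."""
    resp = await fake_model(query, history)
    if not resp.tool_calls:
        return resp, history, True  # no tool calls means the model has finished
    # Pretend to run each tool and record the result so the next turn sees it.
    results = [f"{name} -> ok" for name in resp.tool_calls]
    return resp, history + ["; ".join(results)], False


async def run(query: str, max_steps: int = 10) -> Tuple[Optional[FakeResponse], int]:
    """Same shape as the Step 4 loop: stop when done or when max_steps is hit."""
    done, history, steps = False, [], 0
    resp: Optional[FakeResponse] = None
    while not done and steps < max_steps:
        resp, history, done = await one_step(query, history)
        steps += 1
    return resp, steps


if __name__ == "__main__":
    final, steps = asyncio.run(run("transformer optimization"))
    print(steps, final.content)
```

The `max_steps` cap matters: if the model keeps requesting tools, the loop still terminates and returns the last response it saw.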

MCP Transports

STDIO Transport

- Local processes

- Direct process communication

- Great for tools, scripts, local files

HTTP Transport

- Remote services

- Scalable web services

- Enterprise deployments

SSE (Server-Sent Events): Deprecated but still used by some host applications like Mirascope
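For the STDIO transport, the MCP specification frames messages as newline-delimited JSON-RPC: one message per line, with no embedded newlines. The sketch below shows that framing with an in-memory stream standing in for a subprocess pipe. `write_message` and `read_messages` are hypothetical helper names, not part of any MCP SDK.

```python
import io
import json
from typing import Iterator, TextIO


def write_message(stream: TextIO, message: dict) -> None:
    """Serialize one JSON-RPC message as a single line.

    json.dumps escapes newlines inside strings, so the line never contains
    a raw newline other than the trailing delimiter.
    """
    stream.write(json.dumps(message, separators=(",", ":")) + "\n")
    stream.flush()


def read_messages(stream: TextIO) -> Iterator[dict]:
    """Yield one parsed message per non-empty line."""
    for line in stream:
        line = line.strip()
        if line:
            yield json.loads(line)


# An in-memory stream stands in for the server's stdin.
pipe = io.StringIO()
write_message(pipe, {"jsonrpc": "2.0", "id": 1, "method": "tools/list"})
write_message(pipe, {"jsonrpc": "2.0", "method": "notifications/initialized"})
write_message(pipe, {"jsonrpc": "2.0", "id": 2, "method": "ping", "params": {"note": "a\nb"}})
pipe.seek(0)
received = list(read_messages(pipe))
```

With a real local server, the same two helpers would wrap the `stdin` and `stdout` of a child process; the HTTP transport carries the same JSON-RPC payloads over web requests instead.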

Security Best Practices

Environment Variables

- Never hardcode secrets

- Use .env files for development

- Proper secret management in production
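One way to apply these rules is to read the secret once at startup and fail fast when it is missing, rather than sending a request with an empty token. A minimal sketch, assuming the variable name used in Step 2; `require_env`, `MissingSecretError`, and `auth_headers` are hypothetical helpers, not part of FastMCP or the demo.

```python
import os
from typing import Dict


class MissingSecretError(RuntimeError):
    """Raised when a required secret is absent from the environment."""


def require_env(name: str) -> str:
    """Return the value of an environment variable, or fail with a clear message."""
    value = os.environ.get(name, "").strip()
    if not value:
        # Name the variable, but never echo secret values in errors or logs.
        raise MissingSecretError(f"Environment variable {name} is not set")
    return value


def auth_headers(token: str) -> Dict[str, str]:
    """Build the Authorization header in the same form the Step 2 server uses."""
    return {"Authorization": f"token {token}"}


if __name__ == "__main__":
    try:
        headers = auth_headers(require_env("GITHUB_PERSONAL_ACCESS_TOKEN"))
        print("Token loaded")
    except MissingSecretError as exc:
        print(f"Configuration error: {exc}")
```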

User Consent

- Show users what actions will be taken

- Clear tool descriptions

- Explicit permission flows
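A consent step can be as small as a wrapper that describes the pending action and only runs the tool if the user approves. This is a sketch under assumptions: `ToolRequest`, `summarize`, and `call_with_consent` are hypothetical names, and the `approve` callback stands in for whatever dialog the host application actually shows.

```python
from dataclasses import dataclass
from typing import Any, Callable, Dict


@dataclass(frozen=True)
class ToolRequest:
    name: str
    description: str
    arguments: Dict[str, Any]


def summarize(request: ToolRequest) -> str:
    """Render the pending action in a form a user can read before approving."""
    args = ", ".join(f"{key}={value!r}" for key, value in sorted(request.arguments.items()))
    return f"{request.name}({args}): {request.description}"


def call_with_consent(
    request: ToolRequest,
    tool: Callable[..., Any],
    approve: Callable[[str], bool],
) -> Any:
    """Run the tool only if the approve callback returns True for its summary."""
    if not approve(summarize(request)):
        raise PermissionError(f"User declined tool call: {request.name}")
    return tool(**request.arguments)


def fake_create_gist(title: str, public: bool) -> str:
    """Stand-in for a side-effecting tool; no network call is made."""
    return f"created {title} (public={public})"


request = ToolRequest(
    name="create_gist",
    description="Create a GitHub Gist",
    arguments={"title": "notes.md", "public": False},
)
```

In a terminal app, `approve` could be `lambda text: input(f"{text} [y/N] ").lower() == "y"`; a desktop host would show a dialog instead.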

Coming Soon: OAuth flows for enterprise deployments

Advanced MCP Features

- Sampling: Servers can request LLM calls from hosts

- Progress Notifications: Real-time updates for long operations

- Elicitation: Servers can request more information from the user through the host application

- Resource Templates: Dynamic resource paths with parameters

- Context Objects: Rich integration with host capabilities

- Authorization: Enterprise OAuth flows

These unlock sophisticated multi-agent workflows
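To make one of these concrete: a resource template is a URI with named parameters, and the server extracts those parameters when a matching URI is read. The sketch below handles only the simple `{name}` form with a regular expression. The `arxiv://papers/{paper_id}/abstract` template and the helper names are made up for illustration, and real SDKs do this matching for you.

```python
import re
from typing import Dict, Optional, Pattern


def compile_template(template: str) -> Pattern[str]:
    """Turn 'scheme://path/{name}' into a regex with one named group per parameter."""
    parts = re.split(r"\{(\w+)\}", template)
    # re.split with a capturing group alternates: literal text at even indexes,
    # parameter names at odd indexes.
    pattern = "".join(
        f"(?P<{part}>[^/]+)" if index % 2 else re.escape(part)
        for index, part in enumerate(parts)
    )
    return re.compile(f"^{pattern}$")


def match_uri(template: str, uri: str) -> Optional[Dict[str, str]]:
    """Return the extracted parameters, or None if the URI does not fit the template."""
    match = compile_template(template).match(uri)
    return match.groupdict() if match else None


TEMPLATE = "arxiv://papers/{paper_id}/abstract"  # hypothetical URI scheme

if __name__ == "__main__":
    print(match_uri(TEMPLATE, "arxiv://papers/1706.03762/abstract"))
    print(match_uri(TEMPLATE, "arxiv://papers/1706.03762/pdf"))
```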

Why not just tools?

You might be thinking: why do we need to go through all this trouble? Why not just use simple CLI tools?

Or maybe… Should I always use MCP?

It Depends.

- Standardized interfaces (like MCP) provide leverage: common logging, auth, metrics, etc.

- CLI tools work well in many cases too!

In short: use off-the-shelf MCP servers when that makes sense, and use simple off-the-shelf tools when that makes sense.

When you need standardized control (logging, metrics, auth, etc.), you might want to require MCP for every integration.
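The leverage argument is easiest to see in code: when every tool goes through one interface, cross-cutting concerns are written once. The sketch below uses a plain Python decorator as a stand-in for that shared interface. `instrumented`, `call_counts`, and the two example tools are hypothetical and do not call any real service.

```python
import logging
import time
from collections import Counter
from functools import wraps
from typing import Any, Callable, List

logger = logging.getLogger("tools")
call_counts: Counter = Counter()  # a very small metrics store


def instrumented(tool: Callable[..., Any]) -> Callable[..., Any]:
    """Add call counting and timing logs to any tool, written once for all tools."""

    @wraps(tool)
    def wrapper(*args: Any, **kwargs: Any) -> Any:
        call_counts[tool.__name__] += 1
        started = time.perf_counter()
        try:
            return tool(*args, **kwargs)
        finally:
            elapsed_ms = (time.perf_counter() - started) * 1000
            logger.info("%s finished in %.1f ms", tool.__name__, elapsed_ms)

    return wrapper


@instrumented
def search_papers(query: str) -> List[str]:
    return [f"result for {query}"]


@instrumented
def create_gist(title: str) -> str:
    return f"gist:{title}"


if __name__ == "__main__":
    logging.basicConfig(level=logging.INFO)
    search_papers("mcp")
    create_gist("notes.md")
    print(dict(call_counts))
```

A loose collection of CLI tools can get the same logging and metrics, but each tool has to be wrapped separately; a shared protocol gives you one place to do it.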

Extending Our Demo

Add PDF Processing

- Download full papers

- Extract and summarize text

- Include detailed analysis in gist

Web Search Integration

- Find related discussions

- Check for implementations

- Add broader context

Private Knowledge

- Search Obsidian notes

- Access company research

- Connect to internal databases

Multi-Modal Analysis

- Process paper figures

- Analyze charts and graphs

- Generate visual summaries

The Composable Future

    graph LR
        A[ArXiv Server] --> D[Research Agent]
        B[PDF Server] --> D
        C[Gist Server] --> D
        E[Web Search] --> D
        F[Obsidian] --> D
        D --> G[Comprehensive Research Reports]

Mix and match servers to create powerful, specialized agents
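Mixing and matching boils down to merging tool namespaces without collisions. The toy below illustrates the idea with a prefix per mounted server. `ToolServer` is a hypothetical stand-in, not FastMCP's implementation, and it copies tools at mount time as a simplification.

```python
from typing import Any, Callable, Dict, List


class ToolServer:
    """Toy stand-in for an MCP server: a named registry of tool functions."""

    def __init__(self, name: str) -> None:
        self.name = name
        self.tools: Dict[str, Callable[..., Any]] = {}

    def tool(self, fn: Callable[..., Any]) -> Callable[..., Any]:
        """Decorator that registers fn under its own name."""
        self.tools[fn.__name__] = fn
        return fn

    def mount(self, other: "ToolServer", prefix: str) -> None:
        """Expose every tool of `other` on this server as '<prefix>_<name>'."""
        for name, fn in other.tools.items():
            key = f"{prefix}_{name}"
            if key in self.tools:
                raise ValueError(f"Tool name collision: {key}")
            self.tools[key] = fn

    def call(self, name: str, **arguments: Any) -> Any:
        return self.tools[name](**arguments)


arxiv = ToolServer("arxiv")
gist = ToolServer("gist")


@arxiv.tool
def search(query: str) -> List[str]:
    return [f"paper about {query}"]


@gist.tool
def create(title: str, body: str) -> str:
    return f"gist '{title}' ({len(body)} chars)"


# One agent-facing server built from two independent ones.
agent_server = ToolServer("research-agent")
agent_server.mount(arxiv, prefix="arxiv")
agent_server.mount(gist, prefix="gist")
```

Adding a PDF or web search server to the agent is then one more `mount` call, which is the property the diagram above is pointing at.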

Questions & Discussion

Thank you!

- Demo Code: This repository's /demo folder

- MCP Docs: modelcontextprotocol.io

- FastMCP: High-level Python development of MCP clients/servers

- MCP GitHub: base implementations of the MCP protocol in multiple languages