
Your competitor just announced their “AI-powered revolution.” The board is demanding updates on AI strategy. Your team rushed to deploy an AI chatbot for customer support, but now users are posting screenshots of it giving nonsensical answers and leaking sensitive information. You’re lying awake at night, watching each new AI advancement with growing anxiety, wondering: Are we falling behind? Are we building the right things? And most crucially - where’s the actual value?

The pressure is intense, especially for B2B SaaS companies and startups where every customer matters and churn is costly. Every week brings news of another breakthrough, another startup’s AI feature, another competitor’s bold claims. Your current implementation isn’t delivering clear ROI, but the path to meaningful value feels overwhelmingly complex and expensive. The board wants to see concrete results, not just “AI features.” Your team is struggling to explain why their AI investments aren’t generating more impact. And with each passing quarter, the stakes get higher as AI capabilities accelerate and market expectations grow.

This is the critical juncture where most AI initiatives crumble. Some companies resign themselves to maintaining basic implementations that barely justify their cost - like chatbots that frustrate more customers than they help. Others panic and throw money at expensive ML specialists, hoping expertise alone will somehow translate into value. Both paths lead to the same outcome: AI becomes a liability rather than an asset.

After leading AI projects at Google, LinkedIn, and startups, and now guiding dozens of companies through their AI implementations, I’ve seen this pattern play out repeatedly. But I’ve also learned something crucial: the difference between companies that merely use AI and those that build lasting advantage isn’t raw technical capability. It’s methodically building the right technical and operational capabilities to transform your AI implementation from a collection of features into a strategic asset that compounds in value over time.

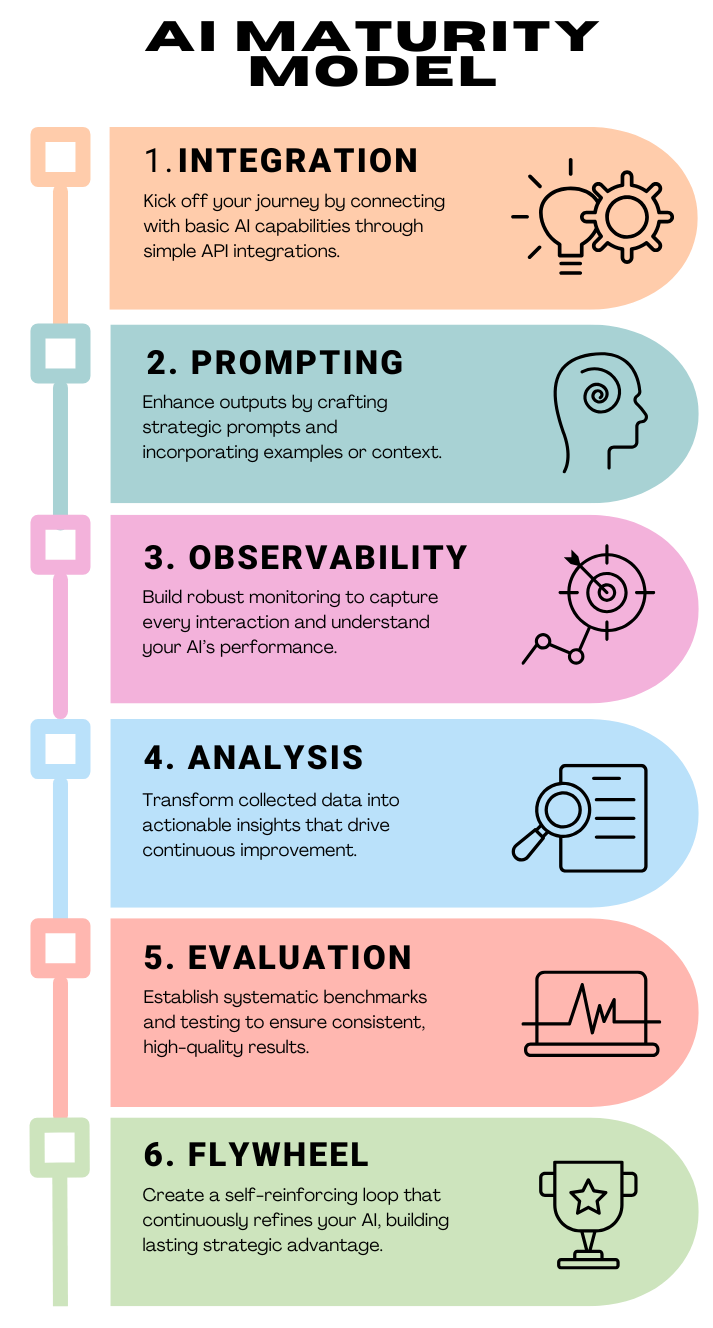

In this guide, I’ll share a detailed maturity model that maps the journey from anxiety-inducing uncertainty to strategic confidence. You’ll learn why most companies get stuck in the early stages of AI maturity, what specific capabilities you need to advance, and how to build systems that actually get more valuable over time. Most importantly, you’ll understand how to make smart investments that move you steadily up the maturity curve without betting the company on risky all-or-nothing plays.

Level 1: Integration

Everyone starts here. You’ve seen the headlines about AI transforming industries, watched competitors launch AI features, and finally decided it’s time to dive in. The good news? This first step is simpler than you might think.

At this stage, you’re essentially building your first bridge to AI capabilities. It’s straightforward: your application makes an API call, and like magic, AI-generated text appears. The architecture couldn’t be simpler - user input goes in, AI response comes out. You’ve probably got some basic error handling (because production systems can’t just crash), and maybe you’re storing API keys securely, but that’s about it.

- Simple API Calls: The system makes straightforward requests to the AI provider, ensuring that the basic functionality is operational.

- Basic Error Handling: Minimal measures are put in place to handle issues such as timeouts or incorrect responses, ensuring that errors do not completely disrupt the user experience.

- Minimal Context Management: Interactions are often limited to immediate, one-off transactions with little retention or understanding of broader context.
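To make this concrete, here’s a minimal Python sketch of a Level 1 integration: one request, one response, and just enough error handling to retry transient failures and fall back gracefully. `call_model` is a hypothetical stand-in for your provider’s client, not a real SDK function; swap in whatever your provider offers.

```python
import time
from typing import Callable

FALLBACK_MESSAGE = "Sorry, I couldn't generate an answer right now."


def ask_model(
    prompt: str,
    call_model: Callable[[str], str],
    max_retries: int = 2,
    backoff_seconds: float = 0.5,
) -> str:
    """Send one prompt, retry transient failures, and fall back gracefully.

    `call_model` is a hypothetical stand-in for your provider's client: it
    takes the prompt and returns the generated text.
    """
    for attempt in range(max_retries + 1):
        try:
            text = call_model(prompt)
            if text and text.strip():
                return text.strip()
            # An empty response is treated like a failure and retried.
        except (TimeoutError, ConnectionError):
            # Transient network problems fall through to the retry below.
            # Any other exception propagates so real bugs stay visible.
            pass
        if attempt < max_retries:
            # Exponential backoff: 0.5s, 1s, 2s, ... between attempts.
            time.sleep(backoff_seconds * (2 ** attempt))
    # Don't crash the user experience: return a friendly fallback instead.
    return FALLBACK_MESSAGE
```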

This entry-level stage is accessible to most organizations for several reasons:

- Well-Documented APIs: Many AI providers offer clear, comprehensive documentation, making it easier for developers to integrate their services.

- Numerous Examples Available: A wealth of online tutorials, code samples, and community support reduce the barrier to entry.

- Clear Implementation Patterns: The typical request-response pattern is straightforward, reducing the need for complex architectural changes.

- Low Technical Barrier to Entry: Even teams with modest technical expertise can successfully implement these basic integrations, making it an ideal starting point for AI adoption.

At this point, you might be feeling pretty good. Your first AI feature is live! But you’re probably also noticing some limitations. Responses are inconsistent, sometimes off-target, and you have little control over the output quality. That nagging feeling that it’s not good enough? That’s what leads to Level 2.

Level 2: Prompting

You’ve moved past the “hello world” stage of AI implementation, and now you’re starting to see both the true potential and the real challenges.

At this level, you’re no longer just asking the AI for answers - you’re teaching it how to think. Your prompts become sophisticated instructions, complete with carefully chosen examples that guide the AI toward the kinds of responses you need. You’re also starting to bring in your own data through broader systems like RAG, because you’ve realized that even the smartest AI needs context about your specific domain.

What you’re building looks more complex:

- Few-shot Prompting with Examples: By providing the AI with carefully curated examples, the system can generate more accurate and context-aware responses.

- Dynamic Context: The system pulls in external knowledge sources on the fly, enriching the input context and improving the AI’s output. Retrieval-augmented generation (RAG) is the most common example.

- Structured Input/Output: There is a move towards a more organized and predictable data flow, where inputs and outputs follow a structured format.

- Basic Output Validation: Initial checks are implemented to ensure that the AI’s responses meet certain quality and relevance criteria.
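Here’s a rough sketch of how these pieces can fit together, assuming a tiny in-memory knowledge base. The word-overlap `retrieve` function is a toy substitute for the embedding-based search most RAG systems use, and `DOCUMENTS`, `FEW_SHOT_EXAMPLES`, and the JSON reply format are all hypothetical.

```python
import json
import re

# Hypothetical knowledge base; in practice this comes from your own documents.
DOCUMENTS = {
    "billing-faq": "Invoices are issued on the first business day of each month.",
    "sso-guide": "Single sign-on is available on the Enterprise plan via SAML.",
    "export-help": "You can export reports as CSV from the Reports page.",
}

# Hypothetical curated example that shows the model the expected reply format.
FEW_SHOT_EXAMPLES = [
    {
        "question": "When will I get my invoice?",
        "answer": {"answer": "On the first business day of each month.", "sources": ["billing-faq"]},
    },
]


def tokenize(text):
    """Lowercase word set used for the toy relevance score."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question, documents, k=2):
    """Return up to k document ids ranked by word overlap with the question."""
    q = tokenize(question)
    ranked = sorted(documents.items(), key=lambda item: len(q & tokenize(item[1])), reverse=True)
    return [doc_id for doc_id, text in ranked[:k] if q & tokenize(text)]


def build_prompt(question, documents, examples):
    """Assemble instructions, retrieved context, few-shot examples, and the question."""
    context = "\n".join(f"[{d}] {documents[d]}" for d in retrieve(question, documents))
    shots = "\n\n".join(
        f"Question: {ex['question']}\nAnswer: {json.dumps(ex['answer'])}" for ex in examples
    )
    return (
        "Answer using only the context below. Reply with JSON containing "
        '"answer" (a string) and "sources" (a list of document ids).\n\n'
        f"Context:\n{context}\n\n{shots}\n\nQuestion: {question}\nAnswer:"
    )


def validate_output(raw, allowed_sources):
    """Return the parsed reply, or None if it fails basic structural checks."""
    try:
        data = json.loads(raw)
    except json.JSONDecodeError:
        return None
    if not isinstance(data, dict):
        return None
    answer, sources = data.get("answer"), data.get("sources")
    if not isinstance(answer, str) or not answer.strip():
        return None
    # Reject citations of documents that are not in the knowledge base.
    if not isinstance(sources, list) or not all(
        isinstance(s, str) and s in allowed_sources for s in sources
    ):
        return None
    return data
```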

Reaching this stage requires a deeper understanding of both the AI model’s capabilities and the specific needs of the application domain:

- Deeper Understanding of LLM Capabilities: Organizations must invest time in understanding how large language models (LLMs) work and how best to harness their potential.

- Domain Expertise for Crafting Examples: Tailoring prompts effectively demands knowledge of the specific domain, requiring collaboration between technical and subject matter experts.

- Technical Complexity of RAG Implementation: Integrating dynamic context through RAG involves more sophisticated technical setups and data management strategies.

- Effort to Maintain and Update Contexts: As applications evolve, so must the examples and contextual data, necessitating ongoing review and maintenance.

The good news? Your responses are more accurate, more reliable, and more aligned with your business needs. The bad news? You’re probably starting to realize how much you don’t know about what’s happening inside your system. When a response is bad, you can never really understand why. Every change feels like a shot in the dark. That’s what leads to Level 3.

Level 3: Observability

At this level, the focus shifts from simply using the AI to rigorously monitoring and measuring its performance. Instrumentation is about embedding a robust set of logging and tracking mechanisms to gain visibility into every interaction with the AI system.

- Logging of All Interactions: Every request and response is recorded, allowing for detailed analysis of how the system is used and where issues may arise.

- Tracking Context Sources: Keeping track of the origins of data and context helps in understanding the influence of various inputs on the AI’s output.

- Performance Metrics: Key performance indicators (KPIs) such as response times, accuracy, and throughput are systematically monitored.

- Error Tracking: A dedicated focus on logging errors helps in identifying recurring issues and facilitates timely troubleshooting.

- Cost Monitoring: As usage scales, monitoring the costs associated with AI calls becomes crucial for managing budgets.

- Latency Measurements: Understanding delays in the system allows teams to optimize both the user experience and the underlying infrastructure.
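A minimal sketch of this kind of instrumentation wraps every model call and emits one structured record per interaction, covering the request, response, context sources, errors, latency, and cost. The `call_model` client (assumed to return the text plus input and output token counts) and the per-token prices are hypothetical placeholders; use your provider’s real client and rates.

```python
import json
import time
import uuid
from dataclasses import asdict, dataclass
from typing import Callable, List, Optional, Tuple

# Hypothetical prices in USD per 1,000 tokens; substitute your provider's rates.
PRICE_PER_1K_INPUT = 0.0005
PRICE_PER_1K_OUTPUT = 0.0015


@dataclass
class InteractionLog:
    request_id: str
    timestamp: float
    prompt: str
    context_sources: List[str]  # ids of documents used to build the prompt
    response: Optional[str]
    error: Optional[str]
    latency_ms: float
    input_tokens: int
    output_tokens: int
    cost_usd: float


def logged_call(
    prompt: str,
    context_sources: List[str],
    call_model: Callable[[str], Tuple[str, int, int]],
    sink: Callable[[str], None],
) -> str:
    """Call the model and emit one JSON record per interaction, success or failure.

    `call_model` is a hypothetical client returning (text, input_tokens, output_tokens).
    """
    start = time.perf_counter()
    response, error, in_tok, out_tok = None, None, 0, 0
    try:
        response, in_tok, out_tok = call_model(prompt)
        return response
    except Exception as exc:
        error = f"{type(exc).__name__}: {exc}"
        raise  # record the failure, but let the caller handle it
    finally:
        # Runs on both paths, so failed calls are logged too.
        latency_ms = (time.perf_counter() - start) * 1000
        cost = (in_tok / 1000) * PRICE_PER_1K_INPUT + (out_tok / 1000) * PRICE_PER_1K_OUTPUT
        record = InteractionLog(
            request_id=str(uuid.uuid4()),
            timestamp=time.time(),
            prompt=prompt,
            context_sources=context_sources,
            response=response,
            error=error,
            latency_ms=round(latency_ms, 2),
            input_tokens=in_tok,
            output_tokens=out_tok,
            cost_usd=round(cost, 6),
        )
        sink(json.dumps(asdict(record)))
```

Passing `lines.append` as the sink is handy in tests; in production you might pass a function that appends each record to a JSONL file or ships it to your logging pipeline.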

Instrumentation introduces a new layer of complexity and requires a coordinated effort across the organization:

- Upfront Planning Required: You cannot log retroactively. If a set of queries wasn’t logged when it happened, you cannot recreate those logs later.

- Additional Infrastructure Needed: Establishing these capabilities often requires new tools and systems for data collection and analysis.

- Engineering Time Investment: Significant engineering resources must be allocated to design, implement, and maintain the instrumentation framework.

- Cross-Cutting Concern: Because logging and monitoring affect many parts of the system, integrating them seamlessly can be challenging.

Through comprehensive instrumentation, organizations gain a clear picture of their AI system’s operational health, paving the way for data-driven optimizations and informed decision-making. But then you hit your next challenge: all this data delivers little leverage because people rarely look at it! This leads us to Level 4.

Level 4: Analysis

Remember all that data you started collecting in Level 3? Now it’s time to make it talk. This is where organizations transition from “we have logs” to “we have insights.”

The challenge isn’t collecting data anymore - you’re drowning in it. The real challenge is turning that flood of information into actionable insights. It’s the difference between knowing that your AI system had a 73% success rate last week and understanding exactly why it wasn’t 93%.

- Regular Review of Logs: Systematic analysis of interaction logs helps to uncover trends, anomalies, and recurring issues.

- Error Analysis: Detailed examinations of error logs enable teams to identify root causes and implement long-term fixes.

- Performance Optimization: Continuous monitoring and analysis drive iterative improvements in system responsiveness and accuracy.

- Cost Optimization: By understanding usage patterns and inefficiencies, organizations can adjust their strategies to manage costs more effectively.

- Understanding User Patterns: Analyzing user interactions provides insights into behavior and preferences, informing future enhancements.

- Identifying Improvement Opportunities: Data analysis can reveal areas for further automation or refinement, driving the system’s evolution.

- Annotating and Enriching Data: By commenting on and labeling the data, you can discover important subgroups and deepen your understanding of the domain to inform decisions.
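Once reviewers have labeled failures, even a few lines of analysis can start answering the “why wasn’t it 93%?” question. This sketch assumes each logged interaction has been annotated with hypothetical `topic`, `ok`, and `failure_label` fields; `ceiling_if_fixed` estimates the success rate you would reach if one failure category were eliminated.

```python
from collections import Counter, defaultdict


def summarize(annotated):
    """Overall success rate, most common failure labels, and success rate per topic."""
    total = len(annotated)
    successes = sum(1 for r in annotated if r["ok"])
    failure_counts = Counter(r["failure_label"] for r in annotated if not r["ok"])
    by_topic = defaultdict(lambda: [0, 0])  # topic -> [successes, total]
    for r in annotated:
        by_topic[r["topic"]][1] += 1
        if r["ok"]:
            by_topic[r["topic"]][0] += 1
    return {
        "success_rate": successes / total if total else 0.0,
        "top_failures": failure_counts.most_common(3),
        "success_by_topic": {t: s / n for t, (s, n) in by_topic.items()},
    }


def ceiling_if_fixed(annotated, label):
    """Success rate if every failure tagged with `label` were fixed."""
    total = len(annotated)
    fixed = sum(1 for r in annotated if r["ok"] or r["failure_label"] == label)
    return fixed / total if total else 0.0
```

Ranking failure categories by how much they would raise the ceiling is one simple way to decide what to work on next.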

But teams will struggle with the cultural change required:

- Dedicated Analysis Time: Teams must allocate regular time for in-depth review and analysis of the collected data.

- Need for Data Literacy: A successful transition to data-driven decision-making requires that team members are skilled in interpreting complex datasets.

- Often No Dedicated Ownership: Without clear responsibility, the analysis and subsequent improvements can fall by the wayside amid competing priorities.

- Competing Priorities: Organizations often struggle to balance immediate operational needs with long-term strategic analysis.

Those that succeed? They’ve usually learned the hard way that “we’ll look at the data when we have time” means “we’ll never look at the data.” They make analysis a priority, not an afterthought.

The reward is worth it, though. For the first time, you’re not just operating your AI system; you’re truly understanding it. But as you see how much time goes into adding the same comments, labels, and annotations over and over, you will hit diminishing returns, because most of your time is spent on the same classes of queries or errors. This is when you start reaching for Level 5 maturity.

Level 5: Evaluation

At Level 4, you learned to extract insights from your data. Now it’s time to systematize that knowledge. This is where you stop relying on spot checks and “it looks good to me” and start building true quality assurance for your AI system.

You’re not just checking quality in an ad hoc way anymore; you’re defining what quality means for your AI system, measuring it consistently, and ensuring it stays high. This transition separates serious AI implementations from experiments.

What you’re building at this level:

- Defined Evaluation Criteria: Clear, measurable benchmarks are set to assess the performance and accuracy of the AI system.

- Test Suites: Comprehensive test cases are developed to simulate a wide range of scenarios, ensuring robust performance.

- Regular Evaluation Runs: The system undergoes periodic testing to track performance over time and catch any deviations early.

- Performance Tracking Over Time: Long-term trends are monitored, enabling proactive adjustments to maintain or improve quality.

- Automated Testing: Where possible, automation is used to streamline the evaluation process, reducing manual intervention.

- Regression Detection: Automated systems help identify when new changes inadvertently degrade performance, ensuring swift corrective action.
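Here’s a minimal sketch of a test suite with regression detection, assuming your AI system can be called as a plain function from prompt to response. `EvalCase`, `run_suite`, and `find_regressions` are illustrative names, not a particular framework; each case pairs an input with a check that decides whether the output is acceptable.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Tuple


@dataclass
class EvalCase:
    name: str
    prompt: str
    check: Callable[[str], bool]  # True when the output is acceptable


def run_suite(cases: List[EvalCase], system: Callable[[str], str]) -> Dict[str, bool]:
    """Run every case through `system` and record pass/fail by case name."""
    results = {}
    for case in cases:
        try:
            results[case.name] = bool(case.check(system(case.prompt)))
        except Exception:
            results[case.name] = False  # a crash counts as a failure
    return results


def pass_rate(results: Dict[str, bool]) -> float:
    return sum(results.values()) / len(results) if results else 0.0


def find_regressions(
    baseline: Dict[str, bool], candidate: Dict[str, bool], tolerance: float = 0.0
) -> Tuple[List[str], bool]:
    """Cases that passed on the baseline but fail on the candidate, plus
    whether the overall pass rate dropped by more than `tolerance`."""
    newly_failing = sorted(
        name for name, ok in baseline.items() if ok and not candidate.get(name, False)
    )
    dropped = pass_rate(candidate) < pass_rate(baseline) - tolerance
    return newly_failing, dropped
```

In practice you would store the baseline results from your last accepted release and rerun the comparison on every prompt or model change, blocking the change when `dropped` is true.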

But here’s the part that humbles even experienced teams: defining “good” for AI is incredibly hard. It’s not like traditional software where a function either works or doesn’t. You’ll face challenges like:

- Complexity in Defining Good Metrics: Developing criteria that accurately capture the nuances of AI performance is not straightforward.

- Effort to Create Test Cases: Crafting comprehensive test suites requires deep technical and domain expertise.

- Infrastructure Needed: Continuous evaluation demands dedicated infrastructure for automated testing and performance tracking.

- Ongoing Maintenance Required: As the system evolves, test cases and evaluation criteria must be regularly updated.

- Need for Both ML and Domain Expertise: A successful evaluation framework relies on insights from both technical and industry-specific perspectives.

Most organizations get stuck here because they underestimate how difficult true quality assurance is for AI. Those that succeed? They’ve usually learned that “we’ll know good quality when we see it” isn’t a strategy - it’s a hope.

The payoff is transformative though. For the first time, you can confidently say how well your AI is performing and know immediately if something goes wrong. Eventually, you’ll reach a point where your eval seems… saturated. Your team has tried several ideas but nothing moves the needle on the eval. That leads you to reach for Level 6 maturity.

Level 6: Flywheel

At the pinnacle of AI maturity, organizations create a continuously improving system by establishing a data flywheel. This stage is marked by the ability to continuously collect, analyze, and integrate feedback into the AI model, driving ongoing improvement and competitive differentiation, with every activity guided by your eval.

- Automated Feedback Collection: Mechanisms are put in place to capture user interactions and outcomes, feeding valuable data back into the system.

- Strategic Data Acquisition: Proactively acquiring the right kind of data—whether through partnerships, public sources, or synthetic data—ensures a steady stream of quality input.

- Continuous Model Improvement: The system is designed to learn from new data, iteratively refining its models to improve accuracy and relevance.

- Dataset Curation: Data is not only collected but also organized and refined, ensuring that only high-quality, relevant information informs model updates.

- Fine-Tuning Capabilities: With curated datasets, the system can be fine-tuned to address specific business challenges and emerging trends.

- Creating Proprietary Advantage: Over time, the accumulation of unique data and insights forms a competitive moat that is difficult for competitors to replicate.
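As a sketch of one turn of the flywheel, the function below filters logged interactions by user feedback and removes duplicates before they become training data. The field names, the 1 to 5 rating scale, and the `{"prompt", "completion"}` record shape are assumptions; fine-tuning providers each define their own required format.

```python
import json


def curate(interactions, min_rating=4):
    """Turn well-rated interactions into deduplicated training records (JSON lines).

    Each interaction is a dict with hypothetical keys "prompt", "response",
    and "rating" (1-5 user feedback, or None when the user gave none).
    """
    seen = set()
    records = []
    for item in interactions:
        rating = item.get("rating")
        if rating is None or rating < min_rating:
            continue  # keep only examples users explicitly rated highly
        # Normalize case and whitespace so trivial variants count as duplicates.
        key = " ".join(item["prompt"].lower().split())
        if key in seen:
            continue
        seen.add(key)
        # Placeholder record shape: adapt it to your fine-tuning provider's format.
        records.append(json.dumps({"prompt": item["prompt"], "completion": item["response"]}))
    return records
```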

Achieving a data flywheel represents the convergence of technical excellence, strategic vision, and long-term investment:

- Requires All Previous Levels: Each earlier maturity level needs meaningful investment before a data flywheel can be effective.

- Complex Infrastructure: The necessary systems for automated data collection, analysis, and model updating are inherently more complex.

- Need for Sophisticated ML Capabilities: Only organizations with deep expertise in machine learning can implement the advanced techniques required.

- Long-Term Investment: Building and maintaining a data flywheel is a long-term strategic commitment, not a quick fix.

- Strategic Planning: A forward-thinking approach is essential, as the benefits compound over time and require careful orchestration across multiple functions.

By reaching Level 6, companies move from simply having AI features to using AI as a dynamic, continuously improving strategic asset that fuels innovation and drives sustained competitive advantage.

Strategic Value Creation

With Level 6 in place, your organization isn’t just operating an AI system; it’s building a long-term strategic asset. While basic API integration can automate tasks, a mature, continuously improving AI system creates value in three primary ways:

- Proprietary Data Assets:
  - Unique Datasets: Over time, the organization accumulates data that is specific to its operations and customer interactions.
  - Curated Examples: These datasets include refined examples that are tailored to the company’s domain.
  - User Interaction Patterns: Insights derived from customer interactions provide a competitive edge.
  - Domain-Specific Knowledge: The collected data reflects deep expertise and understanding of the industry, making it difficult for competitors to match.

- Continuous Improvement:
  - System Gets Better Over Time: With a self-reinforcing feedback loop, the AI system continuously learns and evolves.
  - Increasing Returns to Scale: As the data flywheel spins, the system’s performance improves, driving even greater returns.
  - Network Effects Possible: The value of the system grows as more data is collected, attracting further usage and improvement.

- Competitive Moat:
  - Hard to Replicate: The proprietary nature of the data and the complexity of the integrated system create barriers to entry for competitors.
  - Compounds Over Time: The benefits of continuous improvement and unique data assets become more pronounced, further entrenching the organization’s competitive position.
  - Domain-Specific Advantage: Tailored insights and specialized knowledge offer advantages that generic solutions cannot match.

AI can be an expensive investment, but by focusing on strategic value creation, organizations not only improve operational efficiency but also build lasting competitive advantages that are integral to long-term success.

An Implementation Guideline for Advancing AI Maturity

I know you’re wondering, where do I begin? Let me walk you through a step-by-step guide.

1. Begin with a Clear Self-Assessment

Evaluate Your Starting Point

Use the maturity model as a diagnostic tool. Identify where your organization stands in terms of basic API integration, prompt engineering, instrumentation, data analysis, evaluation, and continuous improvement.

Gather Cross-Functional Input

Engage stakeholders from IT, product, operations, and business units to provide a holistic view of current capabilities. This ensures that both technical feasibility and business impact are considered.

Document Existing Processes

Record how current AI integrations work, the systems used for error handling, and any data logging or monitoring already in place. This documentation will serve as your baseline.

2. Craft a Strategic Roadmap

Set Clear Milestones

Define specific, measurable goals for each maturity level. For instance, move from basic error handling (Level 1) to implementing dynamic prompt examples (Level 2) within a set timeframe.

Prioritize Improvements

Identify which levels will deliver the most value for your organization in the short term. While a fully matured data flywheel is ideal, starting with robust instrumentation (Level 3) can unlock immediate benefits in troubleshooting and performance optimization.

Align with Business Objectives

Ensure that every step on your roadmap is tied to a tangible business outcome—whether it’s improving customer experience, reducing costs, or enabling more sophisticated product features.

3. Invest in Talent and Partnerships

Build a Cross-Disciplinary Team

Assemble a team that includes engineers, ML experts, domain specialists, and product managers. Each level of maturity requires a blend of technical expertise and industry insight.

Leverage External Expertise

If certain skills are lacking internally, consider engaging with consultants, partnering with academic institutions, or using vendor solutions. This can accelerate learning, particularly in more advanced areas like RAG implementation and continuous model improvement.

4. Prioritize Robust Instrumentation Early

Embed Logging and Monitoring

As soon as you start integrating AI, invest in comprehensive logging, performance tracking, and cost monitoring. These capabilities are essential not only for debugging but also for informing later stages of data analysis and evaluation.

Choose Scalable Tools

Opt for instrumentation solutions that can grow with your system. Early decisions here will influence how easily you can expand into detailed performance analytics and continuous improvement.

5. Foster a Culture of Data-Driven Improvement

Schedule Regular Reviews

Make data analysis a routine part of your operational process. Regularly review logs, evaluate error patterns, and adjust strategies based on what the data reveals.

Assign Ownership

Designate clear roles or teams responsible for monitoring AI performance and driving enhancements. Without clear ownership, valuable insights can be overlooked amid daily operational pressures.

Encourage Experimentation

Promote a culture where teams feel empowered to test new ideas and iterate on prompt engineering, evaluation frameworks, or feedback mechanisms. Learning from failures is as important as celebrating successes.

6. Establish Formal Evaluation Processes

Develop Comprehensive Test Suites

Create test cases that simulate a range of real-world scenarios. This not only safeguards against regressions but also builds confidence in your AI system’s performance. Start with easy tests like responses that may be too short or too long and evolve as you learn more about common failure modes.
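A length check is about the simplest test you can write. This sketch uses word counts with placeholder bounds (5 and 150 words) that you would tune to your own product.

```python
def check_length(response, min_words=5, max_words=150):
    """Return failure reasons for one response; an empty list means it passed."""
    words = len(response.split())
    failures = []
    if words < min_words:
        failures.append(f"too short: word count {words}, minimum {min_words}")
    if words > max_words:
        failures.append(f"too long: word count {words}, maximum {max_words}")
    return failures


def failing_responses(responses, **bounds):
    """Map the index of each failing response to its failure reasons."""
    report = {}
    for i, response in enumerate(responses):
        reasons = check_length(response, **bounds)
        if reasons:
            report[i] = reasons
    return report
```

Returning reasons instead of a bare boolean makes it easy to add more checks later as you learn your system’s common failure modes.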

Develop Domain-Specific Benchmarks

Use your continuously improving domain understanding to guide the development of benchmarks that clearly measure the AI system’s performance on the task(s) you care about. You can ignore generalized benchmarks for the most part.

Define Success Metrics

Establish clear evaluation criteria that reflect both technical performance (e.g., response time, accuracy) and business outcomes (e.g., customer satisfaction, cost efficiency).
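One way to keep technical and business metrics in view together is a single scorecard computed from per-interaction records. The record fields here are hypothetical, p95 latency uses the nearest-rank percentile method, and the function assumes at least one record.

```python
import math
import statistics


def percentile(values, pct):
    """Nearest-rank percentile, with pct between 0 and 100."""
    ordered = sorted(values)
    rank = max(1, math.ceil(pct / 100 * len(ordered)))
    return ordered[rank - 1]


def scorecard(records):
    """Combine technical and business metrics from per-interaction records.

    Hypothetical record keys: latency_ms, correct (bool),
    csat (1-5, or None if the user gave no rating), cost_usd.
    Assumes `records` is non-empty.
    """
    latencies = [r["latency_ms"] for r in records]
    ratings = [r["csat"] for r in records if r["csat"] is not None]
    correct = sum(r["correct"] for r in records)
    total_cost = sum(r["cost_usd"] for r in records)
    return {
        "p95_latency_ms": percentile(latencies, 95),  # technical
        "accuracy": correct / len(records),  # technical
        "avg_csat": statistics.mean(ratings) if ratings else None,  # business
        "cost_per_correct_answer": total_cost / correct if correct else None,  # business
    }
```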

Automate Where Possible

Implement automated testing and evaluation pipelines to ensure that performance is continuously monitored and maintained. Automation reduces manual overhead and increases reliability.

7. Plan for Long-Term Investment and Scalability

Understand the Commitment

Transitioning to a fully self-improving data flywheel (Level 6) is a long-term journey that builds on every previous stage. Be prepared for ongoing investment in infrastructure, talent, and strategic planning.

Design with Flexibility

Create systems that can scale and evolve. Avoid rigid architectures that could hinder future growth or make it difficult to integrate new data sources, learning mechanisms, or model providers. The space changes quickly, and you want infrastructure that helps you keep pace.
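One common way to stay flexible is to have your application depend on a small interface rather than on any single vendor’s SDK. In this sketch, `TextModel`, the two stand-in models, and `ModelRouter` are all hypothetical; a real adapter would wrap your provider’s client behind the same `generate` method.

```python
from typing import Dict, Optional, Protocol


class TextModel(Protocol):
    """The only interface the rest of the application depends on."""

    def generate(self, prompt: str) -> str: ...


class EchoModel:
    """Stand-in provider; a real adapter would wrap a vendor SDK here."""

    def generate(self, prompt: str) -> str:
        return f"echo: {prompt}"


class ShoutModel:
    """A second stand-in, showing that swapping providers needs no caller changes."""

    def generate(self, prompt: str) -> str:
        return prompt.upper()


class ModelRouter:
    """Select a provider by name, so switching is a configuration change."""

    def __init__(self, providers: Dict[str, TextModel], default: str):
        if default not in providers:
            raise KeyError(f"unknown default provider: {default}")
        self.providers = providers
        self.default = default

    def generate(self, prompt: str, provider: Optional[str] = None) -> str:
        return self.providers[provider or self.default].generate(prompt)
```

Because callers only see `generate`, moving traffic to a new provider, or comparing two providers on your eval, no longer requires touching application code.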

Anticipate Future Needs

Look ahead to emerging trends and consider how your AI system might need to adapt over time. Strategic planning now can mitigate the risk of costly rework later.

8. Monitor Progress and Communicate Success

Regularly Reassess Maturity Levels

Periodically use the maturity model to gauge your progress. Adjust your roadmap based on these assessments to ensure that you’re moving steadily toward strategic asset creation.

Celebrate Milestones

Recognize and reward progress at each stage. Celebrating small wins boosts morale and encourages further innovation.

Share Insights Internally

Communicate successes and lessons learned across the organization. A transparent approach helps build support for AI initiatives and aligns teams around a common vision.

You may still be stuck on the questions: Are we building the right thing? Where is the actual value? By following these guidelines, your organization can avoid common pitfalls and steadily advance from basic integrations to a strategic asset that compounds in value over time. The journey may be challenging, but with careful planning, dedicated resources, and a focus on continuous improvement, you can turn AI from a simple tool into a source of lasting competitive advantage.

Ready to Stop Guessing and Start Building?

If you’re ready to transform your AI from a collection of features into a strategic asset, I can help your team build the systematic evaluation foundation that enables continuous improvement. My one-week intensive workshop focuses specifically on getting your team from Level 1 to Level 4 - building the instrumentation, analysis, and evaluation capabilities that unlock real AI maturity.

Schedule a free consultation to discuss your team’s current maturity level →

Or take the AI Maturity Model Assessment to see where you stand and get a personalized plan to improve your AI maturity.